Offshore software development means building software with a team in another country, often with a different time zone and labor market. Offshore development can speed up delivery, access specialized talent, and improve cost-efficiency when local hiring is slow or expensive, but it only works well when you treat it as a strategic approach to distributed product engineering, with clear ownership, security controls, and measurable outcomes (not just “cheaper coding” or traditional outsourcing).

Done right, it also adds scalability without locking you into permanent headcount, while making better use of your internal resources (product leadership, architecture, and domain expertise).

Key takeaways

- Pick the right model: Onshore = control, Nearshore = real-time collaboration, Offshore = scalability + cost leverage (cost-effectiveness), Hybrid = balance (with more coordination).

- Country ≠ outcome: countries differ in time-zone fit and specialized talent. Validate the offshore team with a pilot, not assumptions.

- TCO > hourly rate: Rework, churn, and slow decisions often cost more than rates.

- Operating discipline is the differentiator: Clear ownership, written acceptance criteria, async-ready workflows, and a shared Definition of Done, supported by consistent tooling and infrastructure (CI/CD, environments, access controls).

- Measure what matters: cycle time, defect escape rate, rework rate (plus basic reliability/security controls).

- 2026 trends raise the bar: cloud-native delivery, platform engineering, AI-assisted SDLC with governance, data engineering, and shift-left security.

What is Offshore Software Development?

Offshore software development is a delivery model where you engage a software team in another country to build, maintain, or modernize software products. In practice, it’s a way to extend your engineering capacity beyond your local labor market, especially helpful when you need specialized skills (cloud, data engineering, DevOps, security, AI enablement) or you need to scale faster than local hiring allows.

Offshore development teams typically support work such as:

- MVPs and proofs of concept (PoCs) (fast validation with controlled scope)

- Product feature delivery (new modules, integrations, UX improvements)

- Legacy modernization (re-platforming, refactoring, cloud migration)

- AI integration (data pipelines, model serving, evaluation, MLOps patterns)

The best offshore software development setups feel like an extension of your product organization, with shared ways of working, shared quality standards, and explicit ownership, not a “handoff factory.”

A Comprehensive Look at the Offshore Industry

Offshore development continues to expand as organizations face:

- Local talent shortages (senior engineers, security, data/AI specialists)

- Rising total cost of hiring (salary rates + recruiting + retention + tooling + management overhead)

- Pressure to deliver faster (product releases, modernization timelines)

- Cloud adoption and platform complexity (more specialized engineering)

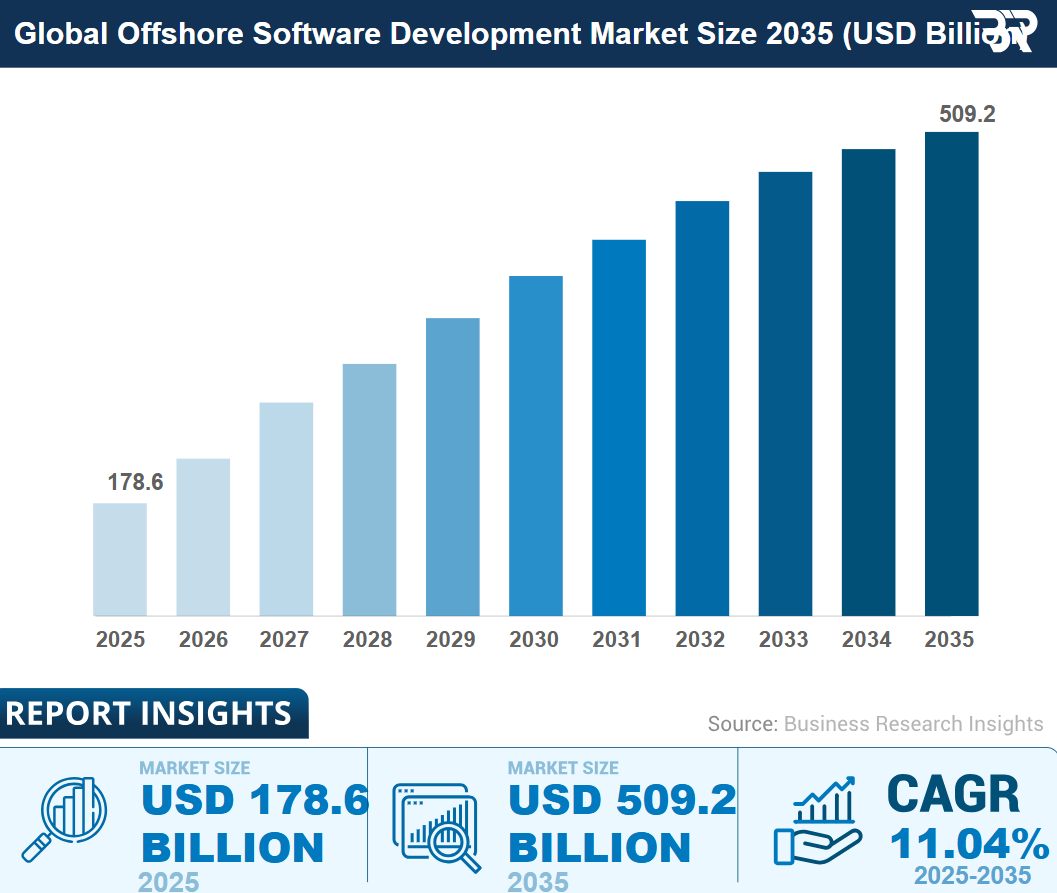

One market estimate puts the offshore software development market at ~$178.6B in 2025, growing to ~$198.3B in 2026, and projecting ~$509.2B by 2035 (methodologies vary by report, so treat these as directional).

For context, broader “software development outsourcing” estimates are also large; one report projects ~$564B in 2025 and ~$897B by 2030, reflecting the wider outsourcing category (not offshore-only).

What this means for decision-makers: offshore software development is no longer just a cost lever; it’s increasingly a capacity and specialization strategy for long-term growth, if you manage the risks associated with distributed delivery (slow decisions, security, and quality drift) and align offshore development execution with your internal operating model.

What are the Differences Between Onshore, Nearshore, Hybrid and Offshore Software Development?

These models differ mainly in cost structures, control, collaboration speed, and operational complexity. The “right” choice depends on your current collaboration model (how decisions, ownership, and communication actually work), and where you are in your business evolution (early discovery vs. scaling a stable product). It also depends on your geographic footprint, required time zone alignment, and whether you’re trying to scale one team or coordinate multiple teams.

| Model | What it means | Main advantage | Main downside | Best when |

| Onshore | Team in the same country | Tight collaboration & control | Highest cost; limited hiring pool | High ambiguity, sensitive systems |

| Nearshore | Team in neighboring countries / nearby time zones | Real-time overlap | Less cost leverage; smaller pools | Workshop-heavy delivery, fast iterations |

| Offshore | Team in distant regions | Cost + larger talent pools | Needs strong async + standards | Structured delivery, scaling capacity |

| Hybrid | Onshore leadership + near/offshore development execution | Balance of control and scale | Coordination overhead | Multiple workstreams, mature orgs |

Onshore Software Development

Onshore means the delivery team is located in the same country as the business.

Pros:

- Shared time zone and work culture context (fewer translation steps in decision-making)

- Faster feedback loops for ambiguous product work (early product development and discovery)

- Often simpler regulatory alignment (less cross-border handling)

Cons:

- Highest TCO

- Harder to scale quickly in competitive markets

- Specialized skills may still be scarce locally (even onshore)

Common pitfall: paying premium rates but still lacking clarity, so the budget goes to rework rather than progress. This is usually a governance problem, not a location problem.

Nearshore Software Development

Nearshore typically means the team operates in a neighboring country with meaningful time-zone overlap, which makes real-time decision-making easier.

Pros:

- Better real-time collaboration than offshore software development (more synchronous working time)

- Often lower cost than onshore

- Easier occasional in-person planning for workshops (if you need it)

Cons:

- Smaller talent pools than the global offshore markets

- Cost advantages are narrowing in many nearshore hubs

- Still requires explicit delivery practices to avoid “meeting-driven” execution

Where it shines: discovery-to-delivery loops that require frequent synchronous workshops (product, UX, stakeholder reviews), especially when the work is ambiguous and changes weekly.

Hybrid Software Development

Hybrid combines locations: product ownership and key decisions stay close to the business, while execution is distributed across nearshore/offshore teams.

Pros:

- Balanced control and scalability

- Keeps sensitive decision-making and domain context close

- Can enable continuous delivery across time zones (when designed intentionally)

Cons:

- Higher coordination overhead (dependencies, handoffs, governance)

- Requires consistent tooling and standards across teams, or you’ll get “two engineering systems” that don’t mesh

What I’d do: use hybrid when you have a clear owner for (1) product decisions, (2) architecture, and (3) quality gates. Otherwise, hybrid becomes “everyone owns it, no one owns it.”

Top Benefits of Offshore Software Development

Offshore software development can help you deliver faster, access specialized skills, and scale engineering capacity, often at a lower total cost than expanding locally. The upside is real, but it only materializes when you have clear ownership, measurable quality assurance gates, and a collaboration rhythm that works across time zones.

1. More predictable total cost (not just “cheaper salaries”)

US compensation for software roles is high and varies widely by region and seniority. But the real lever of offshore software development is typically cost efficiency through lower total cost of ownership (TCO), not just rate cards or developer hourly rates.

TCO (clear definition): the full cost to build, run, and change software over time, including:

- Recruiting and ramp time (your recruitment + onboarding drag)

- Churn/attrition impact

- Tooling and infrastructure setup (environments, CI/CD, access controls)

- Management overhead

- Rework (the biggest silent budget killer)

- Ongoing maintenance and support costs (incidents, upgrades, security patching, bug-fix load)

What I’d do in your position:

Build a simple TCO model with 3 lines: delivery cost + management time + rework cost. If rework is >15–20% of effort in early sprints, savings will evaporate, so tighten acceptance criteria and quality gates before you scale.
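The three-line model above can be sketched in a few lines of Python. This is a minimal illustration, not a costing standard: the `SprintCosts` fields, the sample figures, and the choice to measure rework as a share of total cost (used here as a proxy for share of effort) are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class SprintCosts:
    """Costs for one sprint, all in the same currency (hypothetical fields)."""
    delivery_cost: float    # vendor invoices for build work
    management_cost: float  # internal coordination time, priced at loaded rates
    rework_cost: float      # effort spent redoing work already marked done


def total_cost(s: SprintCosts) -> float:
    """Three-line TCO: delivery + management time + rework."""
    return s.delivery_cost + s.management_cost + s.rework_cost


def rework_share(s: SprintCosts) -> float:
    """Rework as a fraction of total cost (assumed proxy for share of effort)."""
    total = total_cost(s)
    return s.rework_cost / total if total else 0.0


def should_pause_scaling(s: SprintCosts, threshold: float = 0.15) -> bool:
    """True when rework exceeds the threshold (0.15 is the low end of 15-20%)."""
    return rework_share(s) > threshold


# Sample figures are invented for illustration only.
sprint = SprintCosts(delivery_cost=40_000, management_cost=6_000, rework_cost=9_000)
print(f"TCO: {total_cost(sprint):,.0f}")
print(f"Rework share: {rework_share(sprint):.1%}")
print("Tighten quality gates before scaling:", should_pause_scaling(sprint))
```

Tracking this per sprint during the pilot gives you a trend line, which is more useful than a single snapshot.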

Where “advanced technology” matters (practically):

Standardizing CI/CD, automated tests, and reproducible environments reduces rework and stabilizes TCO. This is also how you adopt new technologies safely, by making change repeatable, testable, and observable.

2. Access to a larger talent pool (especially for niche skills)

Many organizations turn to offshore software development because local hiring can’t keep up with demand. The U.S. Bureau of Labor Statistics projects about 129,200 openings per year (on average) for software developers, QA analysts, and testers over the decade, evidence of persistent demand pressure.

Some offshore regions also produce large numbers of IT students annually; for example, Vietnam is often reported at ~50,000–57,000 IT student enrollments per year (definitions vary).

Practical takeaway:

Offshore development can widen access to global talent, especially for niche expertise (cloud platform engineering, data engineering, security automation) and diverse skill sets across modern stacks (cloud, data, security, QA automation). But senior capability still needs verification.

What I’d do in your position:

Run a 4–6 week pilot focused on one “thin slice” feature and evaluate:

- system design trade-offs (can they reason under constraints?)

- code review quality (do reviews prevent real defects?)

- test strategy maturity (do tests protect critical paths?)

- ability to deliver custom software development (integrations, compliance constraints, performance budgets)

Common pitfall: “Senior in title only.” Your antidote is a paid pilot + clear Definition of Done + objective metrics.

3. Faster time-to-market (when onboarding is engineered)

Local hiring cycles can take months. Offshore software programs can move faster if onboarding is engineered, not improvised:

- Clear acceptance criteria (testable requirements)

- A stable backlog

- Fast environment provisioning

- Shared Definition of Done (what “complete” means)

Realistic example:

A common first win is shipping a “thin slice” feature (UI + API + tests) in 2–3 sprints, while your internal team focuses on roadmap, customer discovery, and architecture decisions.

Where “round-the-clock development” is real (and where it isn’t):

It works best for well-scoped items (bug fixes, test automation, incremental features) with clear acceptance criteria. It breaks down when requirements are ambiguous and decision latency creates churn.

Common pitfall: Speed collapses when teams build before requirements are testable. If you can’t write acceptance criteria, you’ll pay for rework.

4. Scalability and flexibility (without permanent headcount lock-in)

Offshore development can improve scalability and flexibility around launches, migrations, and peak season. The mistake is scaling people before scaling your delivery system.

What I’d do (scale in this order):

- Shared tooling (repo access, CI/CD, environments, secrets)

- Quality gates (tests, code review rules, release checklist)

- Collaboration rhythm (planning, async updates, demo cadence)

- Then add squads

Where hybrid development models fit:

Many mature orgs adopt hybrid development models (e.g., local product ownership + distributed delivery pods) to keep rapid decisions close to customers while scaling execution globally.

5. Risk reduction through stronger engineering discipline (if you enforce it)

Counterintuitive but true: offshore outsourcing can reduce risk when it forces you to formalize practices you should have anyway. These practices reduce security exposure and stabilize delivery:

- automated testing

- continuous integration (CI)

- code review standards

- release checklists + observability (logs/metrics/traces)

Metrics to track (simple, executive-friendly):

- Cycle time: idea → production

- Defect escape rate: issues found after release

- Rework rate: reopened “done” work

- Time to restore: how quickly incidents are resolved

This is quality assurance in action: fewer escaped defects, fewer reopens, faster recovery, measured and visible.
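The four metrics above can be computed from a basic ticket and incident export. The sketch below is one possible reading, with assumptions stated plainly: the `WorkItem` and `Incident` fields are hypothetical, defect escape rate is taken as defects found after release divided by all defects found, and rework rate as reopened items divided by all delivered items. Your tracker may define these differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class WorkItem:
    started: datetime       # when the idea was accepted for work
    released: datetime      # when it reached production
    reopened: bool = False  # reopened after being marked done


@dataclass
class Incident:
    detected: datetime
    restored: datetime


def cycle_time_days(items):
    """Average days from idea to production."""
    return mean((i.released - i.started).total_seconds() / 86_400 for i in items)


def rework_rate(items):
    """Share of delivered items that were reopened."""
    return sum(i.reopened for i in items) / len(items)


def defect_escape_rate(found_after_release, found_before_release):
    """Share of all known defects that were only found after release."""
    total = found_after_release + found_before_release
    return found_after_release / total if total else 0.0


def time_to_restore_hours(incidents):
    """Average hours from detection to restoration."""
    return mean((i.restored - i.detected).total_seconds() / 3_600 for i in incidents)


# Invented sample data for illustration.
day = datetime(2026, 1, 5)
items = [
    WorkItem(day, day + timedelta(days=4)),
    WorkItem(day, day + timedelta(days=6), reopened=True),
    WorkItem(day, day + timedelta(days=8)),
    WorkItem(day, day + timedelta(days=6)),
]
incidents = [
    Incident(day, day + timedelta(hours=2)),
    Incident(day, day + timedelta(hours=4)),
]

print("Cycle time (days):", cycle_time_days(items))
print("Rework rate:", rework_rate(items))
print("Defect escape rate:", defect_escape_rate(3, 17))
print("Time to restore (hours):", time_to_restore_hours(incidents))
```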

Benefits-to-use-case map (quick decision table)

| Your priority | Offshore development helps most when… | Watch out for… | KPI to verify |

| Ship faster | backlog is stable and acceptance criteria are clear | rework from unclear requirements | cycle time trend |

| Fill skill gaps | you can validate senior capability early | “senior in title only” | review quality + defect escape |

| Scale capacity | your delivery process is repeatable | coordination overhead | throughput stability |

| Reduce TCO | you manage quality and churn | attrition + rework | rework rate + retention |

Best Offshore Development Destinations in 2026 (and how to choose)

Offshore software development can be a smart way to expand your global talent pool, increase flexibility, and protect core business activities, but only if the “destination” matches your collaboration needs and risk profile. In practice, the best offshore development choice is rarely a single country; it’s a pairing of collaboration model + team maturity + compliance posture.

A useful starting point is to think the way location benchmarks do: compare destinations across multiple dimensions (cost, talent, business environment, digital readiness). Kearney’s Global Services Location Index is a well-known example of this kind of multi-metric approach.

| Destination | US overlap | EU overlap | AU overlap | Talent depth (AI/cloud) | English | Compliance readiness | Cost band | Best-fit model | Main risks |

| Vietnam | Low | Low | High | Medium (growing) | Medium (EF EPI: #64) | Medium | Low ($28–$45+ per hour) | Async-first squads; product dev + QA automation | Senior depth uneven → strong tech lead, strict code reviews, pilot |

| India | Low | Medium | High | Medium | Medium (EF EPI: #74) | Medium | Low ($25–$45+ per hour) | Scale programs; enterprise integration; 24/7 support | Vendor variance → role calibration, multi-stage interviews, measurable pilot |

| Philippines | Low | Low | High | Medium (role-dependent) | High (EF EPI: #28) | Medium | Low ($22–$40+ per hour) | Dev + support/QA hybrid; customer-facing ops | Niche depth varies → pair with another hub; clear scope |

| Poland (CEE) | Medium | High | Low | High | High (EF EPI: #15) | High | High ($45–$70+ per hour) | Real-time EU agile; complex/regulated systems | Higher cost → use for core systems, architecture-heavy work |

| Romania / Bulgaria | Medium | High | Low | Medium/High | High (EF EPI: #11 & #18) | High | High ($45–$65+ per hour & $30–$55+ per hour) | EU overlap squads; full-stack + QA | City concentration → plan hiring timeline, retention plan |

| Portugal / Spain | Medium (US East) | High | Low | Medium/High | High (EF EPI: #6) / Medium (EF EPI: #36) | High | High ($37–$77+ per hour & $37–$75+ per hour) | Nearshore-style collaboration; stakeholder-heavy work | Talent competition → stable teams, strong onboarding |

| Mexico / Colombia (nearshore US) | High | Medium | Low | Medium | Low (EF EPI: #103 & #76) | Medium | Moderate ($40–$75 per hour & $35–$70+ per hour) | Real-time U.S. product squads | Scaling limits → bilingual leads, phased scaling, pilot |

| Brazil / Argentina (LATAM depth) | High | Medium | Low | Medium | Low (EF EPI: #75) / High (EF EPI: #26) | Medium | Moderate ($40–$70+ per hour & $35–$65+ per hour) | U.S.-overlap product squads; end-to-end delivery | Macro volatility → continuity plan, contracting, knowledge transfer |

| Singapore (hub, premium) | Low | Low | High | High | High | High | Very High ($70–$180 per hour) | Hub-and-spoke (SG leads, APAC builds) | Premium cost → use for architecture/governance, not bulk build |
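A table like this becomes easier to act on once you weight the dimensions by what your program actually needs. The sketch below scores three rows from the table (US overlap, talent depth, compliance readiness, cost band). The numeric mappings and both weight sets are hypothetical choices for illustration, and "Medium (growing)" is simplified to "Medium".

```python
# Ratings copied from the comparison table; numeric mappings are assumptions.
LEVEL = {"Low": 1, "Medium": 2, "High": 3}
COST = {"Low": 3, "Moderate": 2, "High": 1, "Very High": 0}  # cheaper scores higher

DESTINATIONS = {
    "Vietnam": {"us_overlap": "Low", "talent": "Medium", "compliance": "Medium", "cost": "Low"},
    "Poland (CEE)": {"us_overlap": "Medium", "talent": "High", "compliance": "High", "cost": "High"},
    "Mexico / Colombia": {"us_overlap": "High", "talent": "Medium", "compliance": "Medium", "cost": "Moderate"},
}


def score(row, weights):
    """Weighted sum of the row's ratings; the cost band uses its own scale."""
    return sum(
        weights[key] * (COST[value] if key == "cost" else LEVEL[value])
        for key, value in row.items()
    )


def rank(weights):
    """Destination names ordered from best to worst fit for the given weights."""
    return sorted(DESTINATIONS, key=lambda name: score(DESTINATIONS[name], weights), reverse=True)


# Hypothetical weight sets: a US team needing real-time work vs. an async-first team.
REAL_TIME_US = {"us_overlap": 0.40, "talent": 0.25, "compliance": 0.15, "cost": 0.20}
ASYNC_FIRST = {"us_overlap": 0.10, "talent": 0.30, "compliance": 0.20, "cost": 0.40}

print("Real-time US team:", rank(REAL_TIME_US))
print("Async-first team:", rank(ASYNC_FIRST))
```

The ranking flips between the two weight sets, which is the point: the same table supports different answers depending on your collaboration model.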

1. Vietnam – Fast-Growing Tech Hub

- Best for: offshore custom software development for web/mobile, cloud services, QA automation, and building a cost-efficient delivery pod in SEA.

- Strengths: strong cost-to-quality ratio, fast ramp for mid-level talent, and a growing tech ecosystem (often clustered in software parks and special economic zones), backed by a strong STEM education system. The country also benefits from increasing government support, favorable tax conditions, competitive exchange rates, and political stability (45.02 percentile).

- Watch-outs: senior/staff depth varies; security posture depends heavily on the vendor’s secure development environments and access controls.

- Practical guidance: require disciplined version control, code reviews, CI/CD, and dedicated QA departments for non-trivial products. Start with a lead+core squad, then scale.

2. India – Largest Talent Pool at Competitive Rates

- Best for: large-scale programs, enterprise integration work, platform engineering, and lower-cost 24/7 maintenance and support through follow-the-sun coverage.

- Strengths: broad talent pool across stacks (cloud-native technologies, containerization tools, data, generative AI/machine learning).

- Watch-outs: wide variance in outcomes and seniority consistency.

- Practical guidance: insist on role calibration (live coding assessments + architecture interviews), clear project management approaches, and a measurable pilot.

3. Philippines

- Best for: customer-facing roles, support + QA, and hybrid build/operate teams where English communication is a priority.

- Strengths: communication, service orientation, good fit for QA and operational roles.

- Watch-outs: deep specialization (certain backend/data niches) may be thinner than larger hubs.

- Practical guidance: pair with another hub for advanced engineering if needed; keep the Philippines team focused on QA, support, and well-defined components.

4. Poland (and CEE options) – High Talent Quality and EU Standards

- Best for: EU-friendly contracting, engineering rigor, fintech/enterprise systems, and nearshore-style delivery for EU teams.

- Strengths: strong ability to ship reliably, good overlap with EU time zones, strong security culture in many firms.

- Watch-outs: higher cost band than APAC; competition for top talent.

- Practical guidance: allocate CEE teams to high-impact areas (architecture-heavy services, core platforms, security-sensitive components).

5. Romania / Bulgaria (CEE alternative)

- Best for: solid full-stack plus QA with EU overlap at a more competitive cost than Western Europe.

- Strengths: good engineering fundamentals; often strong in automation and full-stack delivery.

- Watch-outs: talent can be concentrated city-by-city; hiring timelines can stretch for niche roles.

- Practical guidance: pick vendors with proven onboarding programs and low attrition evidence; plan a longer ramp for niche stacks.

6. Portugal / Spain (EU nearshore bridge)

- Best for: EU teams prioritizing cultural compatibility and collaboration; also workable overlap for U.S. East Coast.

- Strengths: strong collaboration, increasing startup/product maturity.

- Watch-outs: cost closer to Western Europe; talent competition in major cities.

- Practical guidance: use for product squads that benefit from tight stakeholder interaction and frequent sprint reviews.

7. Mexico / Colombia (nearshore to the U.S.)

- Best for: U.S. teams that need real-time daily stand-ups, rapid iteration, and tight feedback loops.

- Strengths: time-zone advantage; easier alignment for agile ceremonies; strong for product roadmap execution.

- Watch-outs: scaling limits by city/stack; language gaps can appear without bilingual leads.

- Practical guidance: require bilingual engineering leadership; keep requirements lean but explicit; invest in shared collaboration tools and documentation.

8. Brazil / Argentina (LATAM depth)

- Best for: product development with strong U.S. overlap; good engineering depth in major hubs.

- Strengths: strong talent in several ecosystems; good fit for end-to-end squads.

- Watch-outs: macro volatility and contracting complexity can affect continuity.

- Practical guidance: build continuity planning into the contract (backup staffing, knowledge transfer cadence, exit plan).

9. Singapore (regional hub, not “low cost”)

- Best for: regulated industries, architecture leadership, and coordinating distributed APAC delivery. Singapore’s Personal Data Protection Act (PDPA) is your baseline reference point.

- Strengths: high compliance maturity expectations; strong governance culture.

- Watch-outs: premium cost.

- Practical guidance: use Singapore as the “hub” (product ownership, security/privacy guidelines, solution architecture), with build capacity in other countries.

How to choose (what I’d do in your position)

If you’re a CEO/CTO choosing offshore development outsourcing in 2026, I’d make the decision in this order:

- Time zone reality first. If you need daily real-time pairing, design reviews, and rapid iteration, you’ll want strong overlap (often LATAM for U.S., CEE for EU, SEA for AU/SG). If your organization is comfortable with async delivery (clear tickets, strong documentation, disciplined code reviews), you can optimize for a wider set of regions.

- Delivery maturity beats “country reputation.” The biggest variance is usually vendor-to-vendor, not country-to-country. Ask for evidence: sample pull requests, CI/CD (continuous integration/continuous deployment) setup, QA and testing strategy, incident runbooks, and a clean project documentation repository.

- Compliance posture is a gating factor. For EU-facing products, GDPR obligations apply regardless of where your offshore software developers sit. For Singapore, PDPA is your baseline; for Australia, the Privacy Act and Australian Privacy Principles matter. For security maturity, ISO/IEC 27001 is a widely recognized benchmark for an information security management system (ISMS). (This is not legal advice; treat it as a practical screening lens.)

- Model the total cost, not just offshore software development rates. Developer hourly rates and “offshore developer rates by region” are only part of the story. The real cost drivers are usually communication overheads, rework costs, project management costs, legal/compliance costs, and long-term maintenance and support costs (plus the cost of technical debt when quality gates are weak).


There is no single “best” outsourcing country for offshore development. The right choice depends on talent depth, delivery maturity, cost structure, time-zone alignment, and risk tolerance. In 2026, Vietnam, India, the Philippines, and Poland remain among the most commonly evaluated options, but they serve different use cases, not interchangeable ones.

Cost, Pricing, and TCO (What Business Buyers Actually Need)

Offshore software development is often pitched as cost savings, but business buyers should manage it as a total cost of ownership (TCO) problem: delivery speed, quality assurance, security, and long-term maintenance and support costs matter as much as developer hourly rates. In offshore software outsourcing, the cheapest rate card can become the most expensive program if it generates rework, communication overheads, or technical debt.

Pricing models (what they mean in practice)

- Time & Materials (T&M): You pay for time spent. Best when scope evolves with the product roadmap. Requires strong governance, sprint reviews, and transparency in velocity.

- Fixed price (with change control): Best when requirements are stable and acceptance criteria are clear. Without disciplined change control, it becomes a conflict factory.

- Retainer / capacity-based: You “rent” a dedicated development team (or offshore development centre capacity). Great for steady throughput and scalability.

- Outcome-based: Works only when outcomes are measurable and controllable (rare in complex software). It can fail when dependencies and integration issues dominate.

What really drives TCO

In my experience, TCO is dominated by: onboarding time, attrition and backfills, quality concerns leading to rework costs, project management costs, and toolchains (CI/CD platforms, cloud services, collaboration tools). Add legal and compliance costs if you operate across US/EU/AU/Singapore requirements, plus the hidden cost of technical debt if code reviews and QA and testing are weak.

How to compare proposals apples-to-apples

Ask vendors to price the same baseline: role mix + seniority, expected throughput assumptions, and what’s included (dedicated QA departments, DevOps, security practices like ISO 27001-aligned controls, documentation, and support). Require a short pilot project to validate work quality before scaling.

Major Challenges of Offshore Software Development Projects

The biggest offshore project risks are coordination, communication, security, and quality drift. These are manageable if you set explicit operating rules and measure outcomes from the first sprint, especially when you’re working with remote teams across time zones.

1. Time-zone differences

In offshore development, decisions and feedback slow down when there’s little overlap across time zones. The hidden cost is communication overheads: more handoffs, more waiting, and more “lost context.”

How to address it (practical):

- Define 2–4 overlap hours for live decisions (not for daily status meetings).

- Make work “async-ready”: written specs, screenshots, short screen recordings.

- Use a “24-hour rule”: blocking questions from the offshore development team must be answered within one business day.
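Before committing to 2–4 overlap hours, it helps to check how many shared working hours two locations actually have. The sketch below is a simplified calculation under stated assumptions: whole-hour UTC offsets only (so it does not handle UTC+5:30), no daylight saving time, and a 09:00–18:00 local working day. The function names are hypothetical.

```python
def working_hours_utc(utc_offset: int, start: int = 9, end: int = 18) -> set[int]:
    """UTC hour slots (0-23) covered by a local working day from start to end."""
    return {(hour - utc_offset) % 24 for hour in range(start, end)}


def overlap_hours(offset_a: int, offset_b: int, start: int = 9, end: int = 18) -> list[int]:
    """UTC hour slots during which both teams are inside their working day."""
    shared = working_hours_utc(offset_a, start, end) & working_hours_utc(offset_b, start, end)
    return sorted(shared)


# Offsets assume standard time: Vietnam UTC+7, Poland UTC+1, US East Coast UTC-5.
vietnam_poland = overlap_hours(7, 1)
vietnam_us_east = overlap_hours(7, -5)

print("Vietnam / Poland shared UTC hours:", vietnam_poland)
print("Vietnam / US East shared UTC hours:", vietnam_us_east)
```

When the result is empty, overlap has to come from shifted schedules on one side, and that cost belongs in your planning rather than being discovered in sprint two.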

What I’d do:

- Run two weekly rituals: (1) planning (live) and (2) demo + decision review (live).

- Everything else is asynchronous, with written artifacts to reduce coordination overhead.

2. Language and cultural friction

Misunderstanding creeps in, especially around “done,” quality expectations, and urgency. This shows up as communication barriers, not because people aren’t capable, but because assumptions differ. In offshore software development setups, cultural differences can amplify small ambiguities into big rework cycles.

How to address it:

- Write acceptance criteria in plain English plus concrete examples.

- Use “definition checks”: ask the team to restate requirements in their own words before building.

- Maintain a shared glossary (especially for regulated domains).

Common pitfall:

Relying on meetings instead of artifacts. Meetings don’t scale; clear written specs do.

3. Scarcity in key specialties (senior talent is competitive everywhere)

Roles like cloud security, AI engineering, and platform SRE (site reliability engineering) can be hard to staff quickly, even offshore.

How to address it:

- Plan critical roles early (4–8 weeks ahead).

- Separate “must-have now” vs “can train” skills.

- Use a hybrid staffing shape: keep a small number of senior domain experts close to decision-making; distribute execution.

What I’d do:

In the pilot, require a senior engineer to produce one architecture decision record (ADR) and lead one design review. This quickly validates real capability in offshore development before scaling.

4. Security and legal risks (especially with sensitive data and IP)

Cross-border development increases exposure in offshore software development if access controls and auditability are not designed from day one, especially under data protection regulations (e.g., GDPR-style requirements) and sector-specific rules such as financial industry compliance. The practical risk to call out is data leakage: accidental exposure via logs, test datasets, screenshots, or misconfigured access.

Baseline controls that scale:

- Role-based access + least privilege

- Audited access to repos and environments

- Secrets management (no credentials in code)

- Dependency and vulnerability scanning

- Clear IP ownership terms (handled by legal counsel)

Security certifications (what to do with them):

If you or your vendor reference a security certification such as ISO/IEC 27001, treat it as a governance baseline for controls. Even without certification, you can implement the practices: access logs, review gates, incident response, and continuous improvement.

What I’d do:

- Keep production data access extremely limited; use masked or synthetic datasets by default.

- Require audit logs for who accessed what, when, and why.
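As an illustration of "masked by default", the sketch below replaces sensitive fields with salted hashes before a record leaves production. It is a minimal example under assumptions: the field names in `SENSITIVE_FIELDS` and the salt handling are hypothetical, and salted hashing is pseudonymization rather than full anonymization, so check it against your own regulatory requirements.

```python
import hashlib

# Hypothetical list of fields to mask; adjust to your own schema.
SENSITIVE_FIELDS = {"email", "phone", "full_name"}


def mask_value(value: str, salt: str) -> str:
    """Replace a value with a short, deterministic salted SHA-256 token."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return f"masked_{digest[:12]}"


def mask_record(record: dict, salt: str) -> dict:
    """Return a copy of the record with sensitive fields masked."""
    return {
        key: (mask_value(str(value), salt) if key in SENSITIVE_FIELDS else value)
        for key, value in record.items()
    }


# Invented sample record; keep the real salt in a secrets manager, not in code.
customer = {"id": 42, "email": "jane@example.com", "plan": "pro"}
masked = mask_record(customer, salt="example-salt")
print(masked)
```

Because the masking is deterministic for a given salt, the same customer maps to the same token across tables, so joins in test data still work.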

5. Quality assurance (QA) drift

Quality slips when teams optimize for speed without automated checks. In offshore development, this risk increases when processes aren’t enforced uniformly across locations and contributors. You prevent this by making quality assurance enforceable in the workflow, not dependent on heroics.

Minimum viable quality system:

- Definition of Done includes tests, code review, and a rollback plan

- Automated unit + integration testing for critical paths

- Continuous integration checks must pass before the merge

- Code review checklist (security, performance, maintainability)

- Weekly defect review to identify root causes (not blame)
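The first items in this list can be enforced as a merge gate instead of a reminder. The sketch below shows the idea in plain Python; the `PullRequest` fields are hypothetical, and in practice you would wire the same checks into your CI system or branch protection rules rather than run a script by hand.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    """Minimal view of a change awaiting merge (hypothetical fields)."""
    ci_passed: bool
    approvals: int
    has_tests: bool
    has_rollback_plan: bool


def unmet_done_criteria(pr: PullRequest, required_approvals: int = 1) -> list[str]:
    """List every Definition of Done item the pull request still fails."""
    unmet = []
    if not pr.ci_passed:
        unmet.append("continuous integration checks must pass")
    if pr.approvals < required_approvals:
        unmet.append("code review approval is missing")
    if not pr.has_tests:
        unmet.append("tests for the change are missing")
    if not pr.has_rollback_plan:
        unmet.append("rollback plan is missing")
    return unmet


def can_merge(pr: PullRequest) -> bool:
    """A change is mergeable only when no criteria are unmet."""
    return not unmet_done_criteria(pr)


candidate = PullRequest(ci_passed=True, approvals=1, has_tests=True, has_rollback_plan=False)
print("Can merge:", can_merge(candidate))
print("Blocking items:", unmet_done_criteria(candidate))
```

Returning the list of blocking items, not just a yes/no, gives the weekly defect review concrete data on which gates fail most often.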

Version control (non-negotiable):

Use shared version control standards (branching strategy, required reviews, protected main branch). If “who changed what” is unclear, both speed and quality collapse.

What I’d do:

Make quality visible in one dashboard: cycle time + defect escape + rework. If defect escape rises, slow down and fix the pipeline.

Offshore Development Best Practices

Offshore software development succeeds when you treat it like distributed product engineering: clear outcomes, explicit ownership, disciplined communication, and automated quality/security checks. The #1 cause of failure isn’t geography; it’s unclear requirements and weak delivery governance built on shaky technical foundations. The fix is simple in concept: well-defined requirements, tight execution loops, and security-by-default.

How to Select the Right Offshore Delivery Setup (without turning this into a “vendor search”)

Instead of optimizing for “the best provider,” optimize for fit: the team’s ability to deliver your outcomes with your constraints (security, compliance, timeline, budget, time-zone overlap), plus realistic talent availability for the roles you need.

1. Clarify goals and requirements (make them testable)

Start with outcomes and constraints, not features, especially in offshore software development, where ambiguity quickly turns into rework.

A practical format (works better than long spec docs):

- Business outcome: what changes for users or revenue/cost?

- Scope boundaries: what’s explicitly out of scope?

- Non-functional technical requirements: performance, availability, privacy, auditability

- Acceptance criteria: “how we know it’s done”

- Success metrics: 2–3 KPIs you can measure in 6–10 weeks

You can use SMART (Specific, Measurable, Achievable, Relevant, Time-bound) for the outcome and success metrics. The key is that requirements are verifiable (a tester can confirm them).

What I’d do in your position:

Include one “technical foundations” section in the first spec: environments, CI expectations, branching, observability, and how releases happen. It prevents early chaos that later looks like “offshore issues.”

2. Validate engineering capability with artifacts (not promises)

Don’t rely on testimonials alone. Ask for evidence of how they build and how they control quality.

What to request:

- Sample architecture decision record (ADR) (1–2 pages)

- One “Definition of Done” checklist

- A code review checklist

- A sample test strategy (unit/integration/e2e)

- One sample incident postmortem template

- Relevant portfolio item similar in complexity (regulated domain, integration-heavy, high availability)

Add assessment steps that predict real performance:

- Coding assessments aligned to your stack (small, time-boxed, production-like)

- Multi-stage technical interviews (one system design, one code review, one debugging)

- Include solution architects in the system design round to test trade-offs under constraints

What I’d do in your position:

Run a pair-review session: give a small PR (pull request) and ask how they’d review it (security, performance, maintainability). This reveals seniority fast and whether their quality control measures are real or aspirational.

3. Choose a delivery model that matches your operating maturity

Define acronyms once, in plain English:

- Staff augmentation: individuals join your team

- Dedicated team: a stable cross-functional team that owns a workstream

- Offshore development center (ODC): a longer-term extension of your delivery org

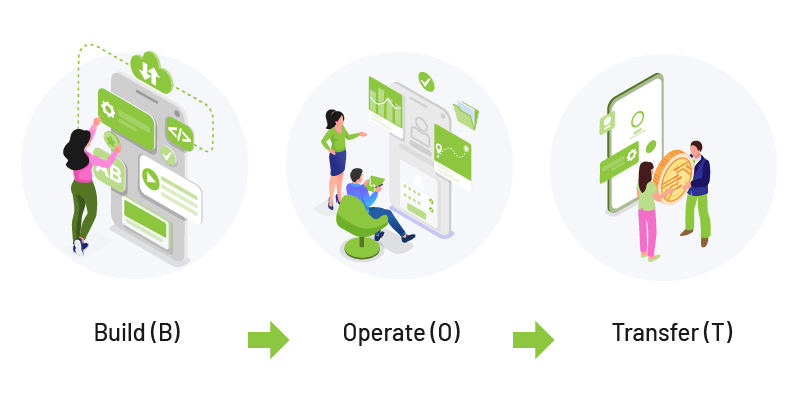

- BOT delivery method (build–operate–transfer): start with an external setup, then transfer into your ownership later

Rule of thumb:

If you have strong internal product + engineering leadership → staff augmentation can work well.

If you want stable velocity with less churn → dedicated squads.

If you want long-term capacity building → ODC or BOT (define transfer criteria upfront).

Where a pilot helps:

Use a pilot project before scaling headcount. It turns “claims” into measurable delivery (velocity, defect escape, and decision speed under your real constraints), and it tests whether your shared project management practices hold up in the real world.
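
To make the pilot measurable, agree on the arithmetic before it starts. The sketch below assumes two simple definitions that the team would need to confirm: defect escape rate as the share of all defects found after release, and decision speed as the median hours a blocking question waits for an answer. The function names are hypothetical.

```python
from statistics import median


def defect_escape_rate(found_before_release: int, found_after_release: int) -> float:
    """Share of all known defects that escaped to production (0.0 to 1.0)."""
    total = found_before_release + found_after_release
    return found_after_release / total if total else 0.0


def median_decision_hours(hours_to_answer: list[float]) -> float:
    """Median wait, in hours, for blocking questions raised during the pilot."""
    return median(hours_to_answer) if hours_to_answer else 0.0
```

The median is used instead of the mean so that one question left open over a weekend does not hide an otherwise healthy response pattern.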

4. Confirm communication and decision-making speed

Offshore software development fails when decisions stall, often due to unclear ownership more than time zones.

Non-negotiables to align:

- Overlap hours for decisions (e.g., 2–4 hours/day)

- “Blocking questions answered within 24 hours”

- One accountable product decision-maker

- One accountable engineering quality owner

- Clear communication rules: what belongs in tickets vs chat vs docs

Quick diagnostic question:

“If a requirement changes mid-sprint, who decides, and how fast?”

If the answer is unclear, expect rework.

What I’d do in your position:

Set up a lightweight collaboration hub: one place where decisions, ADRs, key links, and “how we work” rules live (Notion/Confluence is fine). Pair that with explicit rules for which collaboration tool or communication channel is used for what:

- Jira/Linear = source of truth

- Slack/Teams = fast clarifications

- Docs = decisions, ADRs, runbooks

- Demos = proof of working software

To keep alignment tight, require transparent workflows: decisions recorded, assumptions explicit, and progress visible at all times.

5. Security and compliance: set baseline controls early

In offshore development, if you handle sensitive data, you need a minimum security posture from day one. ISO/IEC 27001 is a common information security management standard used as a baseline for controls and auditability.

Minimum controls (even without formal certification):

- Least-privilege access + audit logs (access control and data protection)

- Secrets management (no credentials in code)

- Dependency vulnerability scanning

- Secure SDLC checks in CI (linting, SAST where appropriate)

- Clear IP ownership and confidentiality terms (legal-led)
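
As an illustration of the "no credentials in code" control, here is a minimal sketch of a pattern-based check that could run before a commit or in CI. The three patterns are assumptions chosen for the example and will miss many real secrets; a dedicated secret scanner is the better choice in practice.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_password": re.compile(
        r"(?i)\b(?:password|passwd|secret|api_key)\s*[:=]\s*['\"][^'\"]{4,}['\"]"
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings
```

A non-empty result would fail the check, and the fix is to move the value into a secrets manager and rotate it, not just to delete the line.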

Contract hygiene (keep it plain English):

- NDA + IP assignment + confidentiality clauses

- Clear legal protections for data handling, breach notification, and subcontractor limitations

- Confirm regulation compatibility for your market (GDPR-style requirements, sector rules)

Data handling practices to require

- Encrypted data storage for sensitive artifacts and backups

- Defined encryption procedures (at rest + in transit)

- Explicit “no production data in dev” rule, with masked/synthetic datasets by default

- Documented cybersecurity incident response process
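
The "no production data in dev" rule is easier to enforce when masking is a routine step in the data export. The sketch below replaces sensitive fields with keyed pseudonyms so joins across tables still line up. The field list, the `masked_` prefix, and both function names are assumptions for illustration; keyed hashing is pseudonymization, not anonymization, so your legal and security leads should confirm it is sufficient for your data.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = ("name", "email", "phone")  # assumption: adjust to your schema


def pseudonymize(value: str, key: bytes) -> str:
    """Return a stable keyed token for a sensitive value."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"masked_{digest[:16]}"


def mask_record(record: dict, key: bytes) -> dict:
    """Copy a record, replacing sensitive fields with pseudonyms."""
    masked = dict(record)  # shallow copy so the source row is left untouched
    for field_name in SENSITIVE_FIELDS:
        if masked.get(field_name) is not None:
            masked[field_name] = pseudonymize(str(masked[field_name]), key)
    return masked
```

The key should live in a secrets manager on the production side only, so the offshore environment receives masked rows without the means to recompute the mapping.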

Trade-off to be explicit about:

Stronger controls can slow the first sprint; they usually speed up everything after by reducing security rework and incident risk.

How to Manage an Offshore Team Effectively

Once you have a partner, results depend on how you manage the team. Effective offshore software development runs on a disciplined operating rhythm, clear ownership, and repeatable execution.

1. Share mission, context, and “why” (not just tasks)

Offshore teams deliver better when they understand:

- user personas and pain points

- the product roadmap and priorities

- what “good” looks like (quality and UX standards)

Practical move: record a 10-minute “product context” video and update it quarterly.

Add cultural compatibility without hand-waving:

Instead of generic “culture fit,” define cultural compatibility as observable behaviors: escalation comfort, how disagreement is handled, clarity in writing, and comfort with ambiguity. Test these during a pilot; this matters more than location in offshore development.

2. Build a “one team” operating rhythm

Avoid vague cultural advice; use repeatable rituals that scale with an offshore team.

Weekly

- Planning (decisions + scope)

- Demo/review (show working software)

- Risk review (top 3 risks and mitigations)

Daily (async-first)

- Written standup: Yesterday / Today / Blockers

- Decision log updates (so context isn’t lost across time zones)

What I’d do in your position:

Make one senior engineer responsible for documenting decisions and onboarding. In offshore software development, this is a core knowledge-transfer role, not an afterthought.

3. Communication stack: choose channels on purpose

Use fewer tools, with clear rules:

- Jira / Linear: source of truth for work

- Slack / Teams: quick clarification (but decisions go to the ticket)

- Docs / Notion / Confluence: requirements, ADRs, runbooks

- Recorded demos: reduce meeting load

Common pitfall: decisions made in chat and never captured, which causes repeat debates and inconsistent builds.

4. Apply disciplined project management (without bureaucracy)

You don’t need a heavy process; you need clarity.

Keep project management lightweight but explicit:

- roles and responsibilities (RACI is fine if kept small)

- dependency management

- a definition of “ready” and “done”

- capacity planning (don’t overload sprints)

Make ownership visible

- Name a project manager (client-side or delivery-side) accountable for milestones, risks, and clarity

- Use weekly progress updates tied to outcomes, not activity

Use project management tools intentionally

- Roadmap + sprint boards for visibility

- Risk register (simple list)

- Decision log (short, consistent)

Milestones and deliverables

Tie work to explicit milestones and verifiable deliverables (demoable software, test results, release notes), not “hours consumed.”

5. Quality assurance from day one (build it into the pipeline)

Quality is cheapest when it’s automated.

Minimum Definition of Done (DoD) checklist

- Acceptance criteria met and demo recorded

- Code reviewed (security + maintainability)

- Automated tests added/updated (critical paths)

- CI green (build, lint, tests)

- Logging/monitoring updated where needed

- Rollback plan documented for risky changes
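
A checklist like this can be encoded as a small merge gate so it is applied the same way on every change. This is a sketch under assumptions: the item keys are hypothetical names for the bullets above, and "logging/monitoring updated where needed" is treated as an explicit confirmation (either updated, or reviewed and not needed).

```python
# Hypothetical keys mirroring the Definition of Done bullets above.
DOD_ITEMS = (
    "acceptance_criteria_met",
    "demo_recorded",
    "code_reviewed",
    "tests_updated",
    "ci_green",
    "observability_confirmed",
)


def unmet_dod_items(status: dict[str, bool], risky_change: bool = False) -> list[str]:
    """List Definition of Done items that are missing or not yet true."""
    required = list(DOD_ITEMS)
    if risky_change:
        # A rollback plan is only required for changes flagged as risky.
        required.append("rollback_plan_documented")
    return [item for item in required if not status.get(item, False)]
```

An empty list means the change can merge; anything else gives the reviewer a concrete, short list to resolve.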

Where Agile/Scrum fits (plain English)

Use Agile and Scrum as a delivery cadence (plan → build → review → improve), not as a ceremony checklist. If rituals don’t change decisions or outcomes, cut them. If you do keep a daily touchpoint, prefer async written updates; use daily stand-up meetings only when there’s active cross-team blocking work.

Tools are optional; outcomes aren’t. Whether you use Postman, JMeter, Appium, or alternatives, the goal in offshore software development is consistent, repeatable quality, not process theater.

Top Offshore Software Development Trends in 2026

In 2026, offshore software development is less about “finding cheaper developers” and more about running a distributed engineering system end to end. Modern offshore development now depends on cloud foundations, platform engineering, AI-assisted workflows, data and analytics capability, and supply-chain security. The winners are teams that can prove outcomes with repeatable practices and measurable delivery metrics.

1. Enterprise stacks remain the default (Java, .NET, Python, TypeScript/Node)

Most organizations continue to build on proven stacks because they reduce hiring risk and operational complexity. Stack Overflow’s survey data consistently keeps JavaScript/TypeScript and Python near the top in usage.

Why it matters for buyers:

Standard stacks make it easier to:

- swap/scale teams without rewriting everything

- maintain long-lived systems

- integrate with common enterprise tools (identity, observability, data platforms)

What I’d do in your position:

Ask your offshore partner for a thin-slice pilot in your target stack (one feature end-to-end, with tests). You’ll learn more from how the team handles code review, automated testing, deployment, and code optimization than from any slide deck.

2. Cloud-native by default (Kubernetes + GitOps + multi-cloud pragmatism)

Kubernetes adoption is now mainstream: CNCF research reports 82% of organizations running Kubernetes in production (up from 66% in 2023), and GitOps principles are widely adopted in the same research.

How to apply this (practically):

Treat cloud-native technologies and cloud-native architecture as a set of operating practices, not a buzzword:

- infrastructure as code (IaC)

- automated deployments

- monitoring + alerting + runbooks

- rollback strategy

Questions to ask (evidence-based):

- “Show me your deployment pipeline and rollback steps.”

- “How do you manage secrets and environment configs?”

- “What’s your incident response process?”

This is where process automation pays off: fewer manual releases, fewer human errors, faster recovery.

3. Platform engineering becomes a delivery multiplier (IDPs, golden paths)

Leadership teams are investing in platform engineering to reduce developer friction and standardize delivery. Gartner has identified platform engineering and AI-augmented development among key software engineering trends.

Plain-English definition:

A platform team builds an Internal Developer Platform (IDP): shared templates, pipelines, environments, and “golden paths” that let product teams ship safely without reinventing tooling each time.

What good looks like (measurable):

Track DORA-style delivery outcomes (speed + stability), such as deployment frequency, lead time for changes, change failure rate, and time to restore.
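
The four outcomes named above can be computed from a plain list of deployment records. The sketch below is one possible calculation, not an official DORA formula: the `Deployment` record shape, the use of medians, and the per-week normalization are assumptions to agree on with your team.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime            # when the change was committed
    deployed_at: datetime             # when it reached production
    failed: bool = False              # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored


def dora_summary(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    """Summarize speed and stability for one reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    restore_hours = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deploys_per_week": len(deployments) / (period_days / 7),
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours": median(restore_hours) if restore_hours else 0.0,
    }
```

Reviewing these numbers per period, rather than per person, keeps the focus on the delivery system and not on individual output.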

4. AI is woven into the SDLC (but governance determines whether it helps)

GenAI use is becoming normal across functions; McKinsey reported 65% of respondents saying their orgs regularly use gen AI (in an early-2024 survey).

At the same time, research from DORA highlights that AI’s impact on delivery performance depends heavily on how it’s introduced and governed, especially as teams adopt large language models (LLMs) for code and documentation.

Practical uses that actually help:

- AI-powered tools for documentation drafts (ADR/runbook drafts), code comprehension, and test suggestions

- AI-driven testing platforms for smarter test selection, failure analysis, and flaky-test triage (with human oversight)

- AI-assisted code review prompts (security reminders, edge-case checks, not auto-approval)

Governance checklist (non-negotiable):

- human review remains accountable for merges

- secure handling of sensitive code/data

- auditability: what was generated, reviewed, and accepted, and why
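
The governance checklist above can be captured as a small audit record attached to each AI-assisted change. This is a sketch with hypothetical field names; the rule that the reviewer must differ from the author is an added assumption, not something the checklist states.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AiAssistedChange:
    change_id: str
    tool: str                      # label for the assistant that generated content
    author: str
    reviewer: Optional[str]        # the human accountable for the merge
    review_notes: str              # what was accepted, and why
    contains_sensitive_data: bool
    sensitive_data_cleared: bool = False


def merge_blockers(change: AiAssistedChange) -> list[str]:
    """Return reasons this change should not merge yet (empty list = clear)."""
    blockers = []
    if not change.reviewer:
        blockers.append("no human reviewer recorded")
    elif change.reviewer == change.author:
        blockers.append("reviewer must differ from author")  # assumption, see above
    if not change.review_notes.strip():
        blockers.append("no record of why the change was accepted")
    if change.contains_sensitive_data and not change.sensitive_data_cleared:
        blockers.append("sensitive code/data handling not cleared")
    return blockers
```

Storing these records next to the pull request gives you the audit trail without adding a separate tool.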

5. Data engineering and “analytics readiness” become table stakes

Teams are building stronger data pipelines to support analytics, personalization, and AI features. In 2026, this also increasingly includes operational data from IoT products (device telemetry, edge events) and selective provenance/audit needs where blockchain is used for traceability in multi-party workflows.

What to verify early (before you scale):

- Ownership of data contracts (schemas, versioning)

- Observability for pipelines (freshness, latency, failure alerts)

- Access controls and audit logging for sensitive datasets (cybersecurity and data protection)

- Performance discipline: code optimization for pipeline hotspots (heavy transforms, high-volume ingestion, streaming joins) so costs and latency don’t spiral as usage grows

Realistic example (what I’d do): Start with one “thin slice” analytics use case (e.g., one customer journey funnel or one device-health dashboard). Define the source events, contract versions, SLA for freshness/latency, and a rollback plan for schema changes. This surfaces data quality issues before you scale teams or add more features.
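
For the thin slice described above, two checks cover most early surprises: whether a new contract version breaks existing consumers, and whether the pipeline meets its freshness SLA. The sketch assumes a contract is a flat mapping of field name to type name, which is a simplification; real schema registries handle nesting, defaults, and optional fields.

```python
from datetime import datetime, timedelta


def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Compare two contract versions; added fields are allowed, the rest is flagged."""
    problems = []
    for name, type_name in old.items():
        if name not in new:
            problems.append(f"removed field: {name}")
        elif new[name] != type_name:
            problems.append(f"type changed: {name} {type_name} -> {new[name]}")
    return problems


def is_fresh(last_event_at: datetime, now: datetime, max_lag: timedelta) -> bool:
    """True when the newest event is within the agreed freshness SLA."""
    return now - last_event_at <= max_lag
```

A non-empty `breaking_changes` result is the trigger for the rollback plan: ship the change as a new contract version instead of editing the existing one in place.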

Common pitfall: “AI feature” plans without data quality and lineage. Your model will reflect your mess, especially when IoT telemetry is noisy or when blockchain-style audit trails exist but aren’t connected to a reliable operational data model.

6. Security and compliance shift left (GDPR, ISO 27001, SBOM, provenance, SSDF)

Security is increasingly a procurement requirement, not a “later” task, especially in regulated industries and cross-border development.

Simple definitions:

- SBOM (Software Bill of Materials): a formal record of components used to build software.

- SLSA (Supply-chain Levels for Software Artifacts): a framework/checklist to improve build integrity and prevent tampering.

- NIST SSDF (SP 800-218): a set of secure development practices you can integrate into any SDLC.

- GDPR: EU data protection rules; when personal data is transferred outside the EU, protections must “travel with the data” via approved mechanisms.

- ISO 27001: a widely used standard for an Information Security Management System (ISMS).

- EU AI Act (timeline note): if your product uses AI and you serve the EU, plan governance and documentation early; the majority of rules apply from 02 Aug 2026 (with some obligations applying earlier/later).

What “shift left” should look like in practice

- CI gates: dependency scanning + security checks

- signed artifacts/provenance for critical releases

- least-privilege access + auditable logs

- documented cybersecurity protocols (incident response, access reviews, patch SLAs)

- multi-layer security frameworks: identity + network controls + secrets management + monitoring (not one control pretending to do everything)
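
As an example of a CI gate, the sketch below turns a dependency-scan report into a pass/fail decision. The report shape (a list of dicts with `package` and `severity`) is hypothetical; map it from whatever your scanner actually emits. Unknown severities are treated as blocking, which is a deliberately cautious assumption.

```python
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(findings: list[dict], threshold: str = "high") -> list[dict]:
    """Return findings at or above the threshold severity."""
    minimum = SEVERITY_RANK[threshold]
    blocked = []
    for finding in findings:
        severity = str(finding.get("severity", "")).lower()
        # Unrecognized severities default to the threshold so they cannot slip through.
        if SEVERITY_RANK.get(severity, minimum) >= minimum:
            blocked.append(finding)
    return blocked


def gate_exit_code(findings: list[dict], threshold: str = "high") -> int:
    """Exit code for a CI step: 0 passes the gate, 1 fails the build."""
    return 1 if blocking_findings(findings, threshold) else 0
```

Starting with a "high" threshold and tightening it later tends to be easier to adopt than blocking on every finding from day one.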

Conclusion

Offshore software development can be a strategic advantage in 2026, but only if you run it as a disciplined engineering system. The winners are not the companies that find the lowest rates. They’re the companies that:

- choose the right delivery model for their decision speed and clarity,

- validate capability with real artifacts and a paid pilot,

- enforce quality and security gates through tooling,

- measure delivery health with a small set of meaningful metrics,

- and scale teams only after the delivery system is stable.

FAQs

1. Does cheaper offshore work automatically mean lower quality?

No. In offshore software development, cost differences usually come from local wage markets and overhead, not from “worse engineering.” In other words, “cheap” can reflect exchange rates and local tax policies as much as engineering capability. Quality depends on engineering leadership, standards, and governance, and whether the offshore team can consistently hit your cost-to-quality ratio target (cost savings without quality drift).

What determines quality in practice

- Clear acceptance criteria (reduces rework)

- Code review discipline (catches defects early)

- Automated testing + CI gates (prevents regressions)

- Stable team continuity (domain knowledge compounds)

Common pitfall: Choosing purely on the lowest rate and then discovering that rework, delays, and churn erase the savings.

What I’d do: Require a pilot that produces working software + tests + release evidence. If a team can’t show quality artifacts early, scaling won’t fix it.

2. How do we reduce language and cultural friction?

Use written-first communication and make expectations explicit. This isn’t about “perfect English”; it’s about English proficiency sufficient for precise technical writing and fast clarification, plus disciplined, clear communication that reduces ambiguity.

Practical actions that work

- Write requirements as user stories + acceptance criteria + examples

- Ask for “playback”: the team restates the requirement in their own words before building

- Use a shared glossary for domain terms (especially regulated industries)

- Capture decisions in tickets/docs (don’t leave critical decisions in chat)

Common pitfall: Relying on meetings to compensate for unclear documentation. Meetings don’t scale; clear written artifacts do.

3. How do we collaborate across time zones without slowing down?

Design for async by default, and reserve live time for decisions and demos.

A simple rhythm

- Daily: async update (Yesterday / Today / Blockers)

- Weekly: live planning + live demo/review

- Always: decision log (what changed, why, who approved)

What I’d do: Establish 2–4 overlap hours for decision-making, not status reporting. Everything else should be runnable without waiting.
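
To check whether a 2–4 hour overlap is realistic for two locations, a quick calculation helps before you commit to a schedule. The sketch below assumes both teams work the same local hours (09:00 to 18:00 by default) and takes fixed UTC offsets, so it ignores daylight saving time; for real scheduling, time-zone-aware datetimes are the safer route.

```python
def overlap_hours(offset_a: float, offset_b: float, start: int = 9, end: int = 18) -> float:
    """Daily overlap, in hours, between two teams given their UTC offsets."""
    # Team A's working window expressed in UTC hours.
    a_start, a_end = start - offset_a, end - offset_a
    total = 0.0
    # Team B's window repeats every 24 hours, so compare neighbouring days too.
    for day_shift in (-24, 0, 24):
        b_start = start - offset_b + day_shift
        b_end = end - offset_b + day_shift
        total += max(0.0, min(a_end, b_end) - max(a_start, b_start))
    return float(total)
```

For example, `overlap_hours(0, 7)` compares a team at UTC+0 with one at UTC+7 and returns 2.0, which sits at the low end of the target range. When the result is zero, one side has to shift its hours or decisions have to move fully async.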

4. How do we handle security and compliance in offshore delivery?

Use a baseline security model that’s auditable and enforce it through tooling, access control, and contracts.

Plain-English baseline controls

- Least-privilege access to repos and environments

- Audit logs for access and deployments

- Secrets management (no credentials in code)

- Vulnerability scanning for dependencies

- Secure coding practices and review checklists

Standards you may hear (simple explanations)

- ISO 27001: an information security management system framework (controls + auditability)

- SOC 2: assurance report focused on security and operational controls

- GDPR: EU privacy regulation (personal data handling)

- HIPAA: US healthcare data requirements (if applicable)

Common pitfall: Assuming security is handled because someone mentions a standard. Always ask how controls are applied day-to-day (access, logging, scanning, incident response).