An AI coding assistant is an IDE-integrated tool powered by large language models. It can suggest code, explain snippets, generate tests, and accelerate reviews, while developers stay in control of what gets accepted. Once simple helpers, these assistants have grown into powerful AI coding agents that streamline development tasks.

AI coding assistant tools are popular now. According to the 2025 Stack Overflow Developer Survey, 84% of developers use or plan to use AI tools in their workflow. 51% of professional developers say they use such tools daily.

On the vendor side, GitHub Copilot leads with 1.8 million paid subscribers and 77,000+ enterprise customers (FY2024). Many developers consider it the best AI for coding. Meanwhile, alternatives like Gemini are emerging. Developers now have multiple powerful options for their jobs. Check out this article to dig into the trend and find the best tool for your project!

What is an AI Coding Assistant?

An AI coding assistant is software powered by large language models (LLMs) that helps developers write, understand, and maintain code. AI coding tools integrate directly with your editor and toolchain for code generation. You can also use them to explain errors, support code review, and automate repetitive changes. In many teams, AI code completion tools work like a virtual pair programmer. Since the developer controls everything, AI coding assistants enhance productivity without replacing humans.

How it works:

- Understands your intent: You type a comment, natural-language question, or prompt.

- Reads context: The system reviews your open files and indexed parts of your repo, docs, or logs.

- Proposes actions: It suggests inline completions, patches, refactors, or step-by-step fixes. You decide to accept, edit, or reject the suggestions.
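The three steps above can be sketched as a small loop. This is a minimal illustration, not how any particular assistant is implemented: `read_context`, `propose`, `apply_decisions`, and the character budget are hypothetical names and parameters, and the model call is replaced by a stub.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """A proposed change that the developer may accept, edit, or reject."""
    file: str
    patch: str


def read_context(open_files: dict[str, str], budget_chars: int) -> dict[str, str]:
    """Step 2: keep open files, in order, until a (hypothetical) size budget is used up."""
    context, used = {}, 0
    for name, text in open_files.items():
        if used + len(text) > budget_chars:
            break
        context[name] = text
        used += len(text)
    return context


def propose(intent: str, context: dict[str, str]) -> list[Suggestion]:
    """Step 3: stand-in for the model call; returns one stub patch per context file."""
    return [Suggestion(file=name, patch=f"# TODO: {intent}") for name in context]


def apply_decisions(suggestions, decide):
    """The developer stays in control: only accepted suggestions are kept."""
    return [s for s in suggestions if decide(s) == "accept"]


intent = "add retry logic to charge()"  # Step 1: the stated intent
open_files = {"billing.py": "def charge(): ...", "notes.md": "x" * 500}

context = read_context(open_files, budget_chars=100)
suggestions = propose(intent, context)
accepted = apply_decisions(
    suggestions,
    decide=lambda s: "accept" if s.file.endswith(".py") else "reject",
)
print([s.file for s in accepted])  # ['billing.py']
```

The `decide` callback is where the human review happens. A real assistant shows a diff at that point and applies nothing until you approve it.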

Learn more: Generative AI Integration Solutions

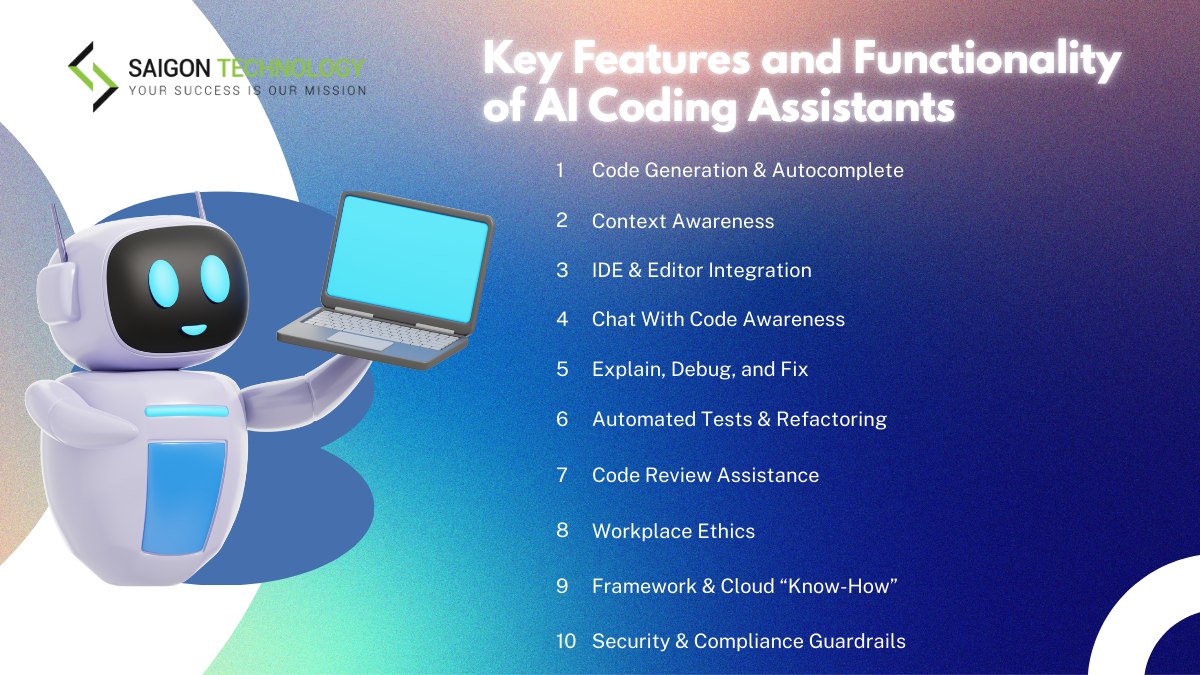

Key Features and Functionality

AI for coding refers to software tools powered by artificial intelligence. Developers use it to write, understand, and maintain code more efficiently. Here is what AI code generation can do, why it matters, and how to judge each feature.

1) Code Generation & Autocomplete

An AI coding assistant offers predictive suggestions at the inline, block, or file level. It can also scaffold functions, classes, tests, and configs to streamline development.

- Why it matters: Developers use AI code generation to cut down boilerplate and context switching. It also speeds up prototyping and allows for more advanced multi-agent workflows.

- What good looks like: Suggestions arrive fast (under 300 ms perceived latency). Acceptance rates are high, and the outputs match your coding style.

- How to evaluate: Check for language and framework coverage, and latency with large files. Review repo-level acceptance rates. See if you can toggle between aggressive and conservative suggestions.
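To make the evaluation concrete, here is a rough sketch of how a team might compute acceptance rate and latency from its own suggestion telemetry. The event format (`accepted`, `latency_ms`) is an assumption for illustration. Real tools expose this data differently, if at all.

```python
import math


def acceptance_rate(events):
    """Share of shown suggestions that the developer accepted."""
    if not events:
        return 0.0
    return sum(1 for e in events if e["accepted"]) / len(events)


def p95_latency_ms(events):
    """Nearest-rank 95th percentile of suggestion latency, in milliseconds."""
    latencies = sorted(e["latency_ms"] for e in events)
    if not latencies:
        return None
    rank = math.ceil(0.95 * len(latencies))  # 1-based rank
    return latencies[rank - 1]


# Hypothetical telemetry: one record per suggestion shown in the editor.
events = [
    {"accepted": True, "latency_ms": 120},
    {"accepted": False, "latency_ms": 180},
    {"accepted": True, "latency_ms": 250},
    {"accepted": True, "latency_ms": 400},
]

rate = acceptance_rate(events)
p95 = p95_latency_ms(events)
print(f"acceptance={rate:.0%}, p95={p95} ms, within budget={p95 <= 300}")
# acceptance=75%, p95=400 ms, within budget=False
```

Tracking a tail percentile rather than the average matters here, because a few slow suggestions on large files are what break the feeling of flow.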

2) Context Awareness (RAG over your code)

An AI code generation system uses your repository, docs, and issue trackers to drive context-aware code completion and edits.

- Why it matters: Context awareness reduces hallucinations. AI code completion can ensure that changes align with your APIs and coding patterns.

- What good looks like: You have a multi-repo context and support for custom libraries. At the same time, the result honors monorepo structure.

- How to evaluate: Check the sources indexed, like code, READMEs, ADRs, and wikis. Also, look at context window size and refresh cadence. Private context isolation is another important criterion.
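As a rough illustration of retrieval with private context isolation, the sketch below ranks indexed chunks by word overlap with the question and only considers repositories the user is allowed to see. Production systems typically use embeddings and a code graph instead of word overlap. The `Chunk` type, the repo names, and the `retrieve` function are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    repo: str
    path: str
    text: str


def tokens(text: str) -> set[str]:
    """Lowercased identifiers and words, used as a crude similarity signal."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def retrieve(query: str, index: list[Chunk], allowed_repos: set[str], k: int = 2) -> list[Chunk]:
    """Return the top-k chunks by token overlap, restricted to allowed repos."""
    q = tokens(query)
    visible = [c for c in index if c.repo in allowed_repos]  # isolation happens first
    scored = [(len(q & tokens(c.text)), c) for c in visible]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


index = [
    Chunk("payments", "api/client.py", "def create_invoice(customer_id, amount): ..."),
    Chunk("payments", "README.md", "Use create_invoice to bill a customer."),
    Chunk("hr-private", "salary.py", "def create_invoice_for_payroll(): ..."),
]

hits = retrieve("how do I create_invoice for a customer", index, allowed_repos={"payments"})
print([c.path for c in hits])  # ['README.md', 'api/client.py']
```

Filtering by `allowed_repos` before scoring means a private chunk can never reach the prompt, which is the property to verify when you assess context isolation.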

3) IDE & Editor Integration

IDE integration means that your AI coding assistant can work with popular IDEs like VS Code, JetBrains, Android Studio, and Neovim.

- Why it matters: You can stay in flow. No need to switch between the browser and editor when using AI code completion.

- What good looks like: Extensions are stable with minimal CPU/RAM impact. You have a keyboard-first UX and accessible commands, too.

- How to evaluate: Check for feature parity across IDEs and confirm offline fallback. Then, review how it handles conflicts with other extensions.

4) Chat With Code Awareness

The side-panel AI-powered chat can be grounded in your open files, git diffs, and project docs. It extends the AI coding agent beyond following commands.

- Why it matters: Developers use the AI coding assistant for faster Q&A. This way, they improve onboarding and instant code tours.

- What good looks like: You have click-to-insert edits and file/line references. There are also links to get back to the sources.

- How to evaluate: Check for traceable citations and reproducible steps. Verify the ability to attach logs and snippets safely.

5) Explain, Debug, and Fix

AI code generation tools support natural language commands, stack-trace analysis, and patch proposals.

- Why it matters: Developers can shorten MTTR and flatten the learning curve for new codebases. AI assistance can also surface more errors and security findings during fixes.

- What good looks like: You get patches that compile. Tests pass and diffs are readable.

- How to evaluate: Measure success rates on real incidents and review the quality of suggested logs/asserts.

6) Automated Tests & Refactoring

AI assistants provide automated test generation for unit and integration tests. This way, the tools support safe code refactoring like rename, extract, and decompose with validation.

- Why it matters: You use automated tests to increase coverage and reduce regression risks. AI improves the maintainability of large codebases as well.

- What good looks like: You have framework-aware support for Jest, JUnit, and PyTest. The test names are deterministic, and the flakiness is minimal.

- How to evaluate: Check coverage delta per PR and improvements in mutation-testing scores. Review the size and readability of refactor diffs.
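Coverage delta and mutation score are simple ratios, so they are easy to track per PR. The sketch below assumes you already have line counts from your coverage tool and mutant counts from your mutation-testing tool. The numbers are made up for illustration.

```python
def coverage_pct(covered_lines: int, total_lines: int) -> float:
    """Line coverage as a percentage."""
    return 100.0 * covered_lines / total_lines if total_lines else 0.0


def coverage_delta(base: tuple[int, int], head: tuple[int, int]) -> float:
    """Percentage-point change in coverage between the base branch and the PR head."""
    return round(coverage_pct(*head) - coverage_pct(*base), 2)


def mutation_score(killed: int, total_mutants: int) -> float:
    """Share of injected mutants that the test suite detected, as a percentage."""
    return 100.0 * killed / total_mutants if total_mutants else 0.0


# Hypothetical figures: (covered_lines, total_lines) before and after an AI-assisted PR.
base = (780, 1000)
head = (840, 1050)

delta = coverage_delta(base, head)
print(delta)                   # 2.0 percentage points
print(mutation_score(45, 60))  # 75.0
```

A positive coverage delta alone can be gamed by shallow tests, which is why pairing it with the mutation score gives a more honest picture of generated tests.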

7) Code Review Assistance

An AI code helper summarizes PRs and flags risks. AI code review covers other review tasks like suggesting edits and enforcing style guides.

- Why it matters: Thanks to code review assistance, developers can speed up reviews. Standards remain consistent across teams.

- What good looks like: You have actionable comments with code examples. The code review features respect repo rules.

- How to evaluate: Check for false-positive rate and the ability to learn from prior merges. Review how well it integrates with security linting.

8) Framework & Cloud “Know-How”

An AI coding assistant is aware of AWS, GCP, and Azure SDKs. It also understands Android and iOS frameworks, infra-as-code, and CI/CD.

- Why it matters: Developers use AI to make safer cloud calls and generate correct scaffolding. Infrastructure can then stay aligned with best practices.

- What good looks like: You have up-to-date API usage and least-privilege access patterns. Plus, you can preview infrastructure diffs.

- How to evaluate: Assess version pinning and deprecation handling. Next, check IaC plan previews for Terraform and CloudFormation.

9) Security & Compliance Guardrails

The best AI programming tools include a feature set that covers secret detection and license awareness. They also allow policy checks against standards like OWASP, SOC 2, and HIPAA.

- Why it matters: Software developers need it to prevent credential leaks and license conflicts.

- What good looks like: You have inline secret redaction, SBOM generation, and license allow/deny lists.

- How to evaluate: Assess on-prem or self-hosted options and data residency controls. Review audit logs and model or telemetry controls.
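Inline secret redaction can be pictured as a filter that runs before any code leaves your machine. The sketch below is a deliberately small example with two illustrative patterns: the common `AKIA` prefix format of AWS access key IDs and quoted values assigned to names like `password`. Real scanners use far larger rule sets plus entropy checks, so treat this as an assumption-laden demo rather than a complete guardrail.

```python
import re

# Illustrative patterns only; a production scanner needs many more rules.
AWS_KEY = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
ASSIGNMENT = re.compile(r"(?i)\b(password|api_key|token)(\s*=\s*)(['\"]).*?\3")


def redact(text: str) -> tuple[str, int]:
    """Replace likely secrets with a placeholder and report how many were found."""
    text, key_hits = AWS_KEY.subn("[REDACTED]", text)
    text, assign_hits = ASSIGNMENT.subn(
        lambda m: f"{m.group(1)}{m.group(2)}{m.group(3)}[REDACTED]{m.group(3)}",
        text,
    )
    return text, key_hits + assign_hits


snippet = 'aws_id = "AKIAABCDEFGHIJKLMNOP"\npassword = "hunter2"\n'
clean, count = redact(snippet)
print(clean)
print(count)  # 2
```

When you evaluate a tool, check that redaction like this happens before the prompt is sent and that the hit count lands in the audit log.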

10) Documentation & Knowledge Surfacing

AI supports code documentation generation by creating or updating docs, READMEs, and API usage notes. It answers “how do we…?” questions directly from your wiki.

- Why it matters: AI coding tools help you keep tribal knowledge current and discoverable. Developers use AI code completion to strengthen collaboration across teams.

- What good looks like: You have links back to sources and PR-friendly doc diffs. They also comply with style guides.

- How to evaluate: Monitor the accuracy of citations and alerts for doc drift. Check for multi-format outputs such as Markdown and ADRs.

Pros and Cons of AI Coding Assistants

Using an AI coding assistant brings both pros and cons. AI offers a high level of customization and automation to increase code quality. Yet, developers have to manage the risks.

Pros:

- Faster delivery on routine work: AI code generation helps create boilerplate, CRUD endpoints, config, and test stubs in seconds. Thus, engineers can focus on logic and review. Features like UI generation capabilities and real-time code suggestions speed up delivery.

- Better test coverage & docs: AI code generation supports unit test generation, docstrings, and READMEs that engineers refine. This way, they raise the definition of done without extra meetings. Static code analysis also improves overall quality.

- Smoother onboarding: New hires can ask the AI coding assistant for codebase context. Then, they get navigable snippets to reduce hand-offs. Contextual code recommendations help them move faster in unfamiliar areas.

- Consistency & style: Code refactoring and lint suggestions align code with team conventions. AI code completion tools help reduce nitpicks in PRs. An AI assistant also applies code smell detection and enforces personalized suggestions based on project patterns.

- Accessibility & learning: “Explain this” and inline examples help juniors and cross-functional teammates contribute safely. With natural language prompting, even non-experts can query the system.

- How to measure the upside: When using AI code completion, developers need to track time-to-first PR and review rework rate. Pay attention to test coverage deltas and bug escape rate, too. Onboarding time before/after a pilot requires equal attention. Improvements in these metrics reveal the real impact of AI coding on your team.

Cons:

- Incorrect/insecure suggestions: An AI programming assistant can hallucinate APIs or miss security checks. Your team should watch for gaps in vulnerability detection.

- License/IP risk: Generated code may resemble restricted-license snippets if you allow broad context. This risk increases with features like cross-language code translation.

- Data exposure & governance: You may send sensitive code or secrets to external AI services. If misconfigured, AI code generation tools may breach policy. Self-hosting and local execution help reduce exposure.

- Over-reliance: Your team may accept large diffs that nobody fully understands. Review habits weaken, especially when you lean on automated code review tools too much. A steep learning curve can also contribute to misuse of the AI coding assistant.

- Latency & cost surprises: Big prompts, long chats, or agentic tasks can be slow and exceed budgets. Code completion results vary depending on model choice.

- Tool sprawl & lock-in: Multiple AI coding assistant tools across teams create inconsistent policies and fragmented logs. Be careful when working on cross-repository insights and project-wide code comprehension.

The 10 Best AI Coding Assistant Tools

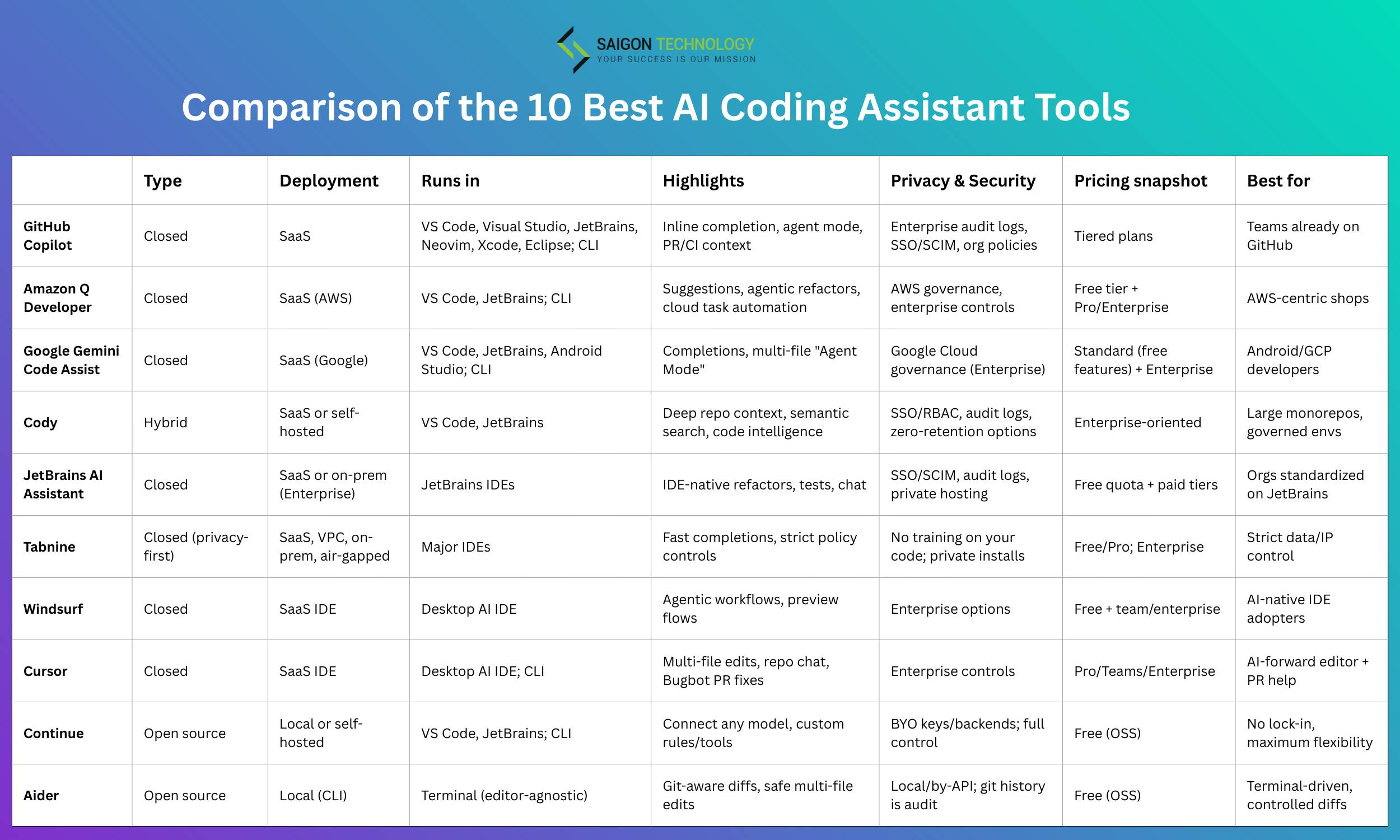

There is no single “best” AI coding assistant. For example, Copilot is best for live coding while ChatGPT and Claude are best for explanations and debugging. Tabnine works best for privacy. If you need large-scale development, consider Cursor. Many developers combine AI coding assistant tools to cover different needs.

Each AI code completion and AI code generation tool has its own standout features, pros, and cons. They come with different pricing (free tier, pro, enterprise) plans. Check out this comparison table before jumping into the details.

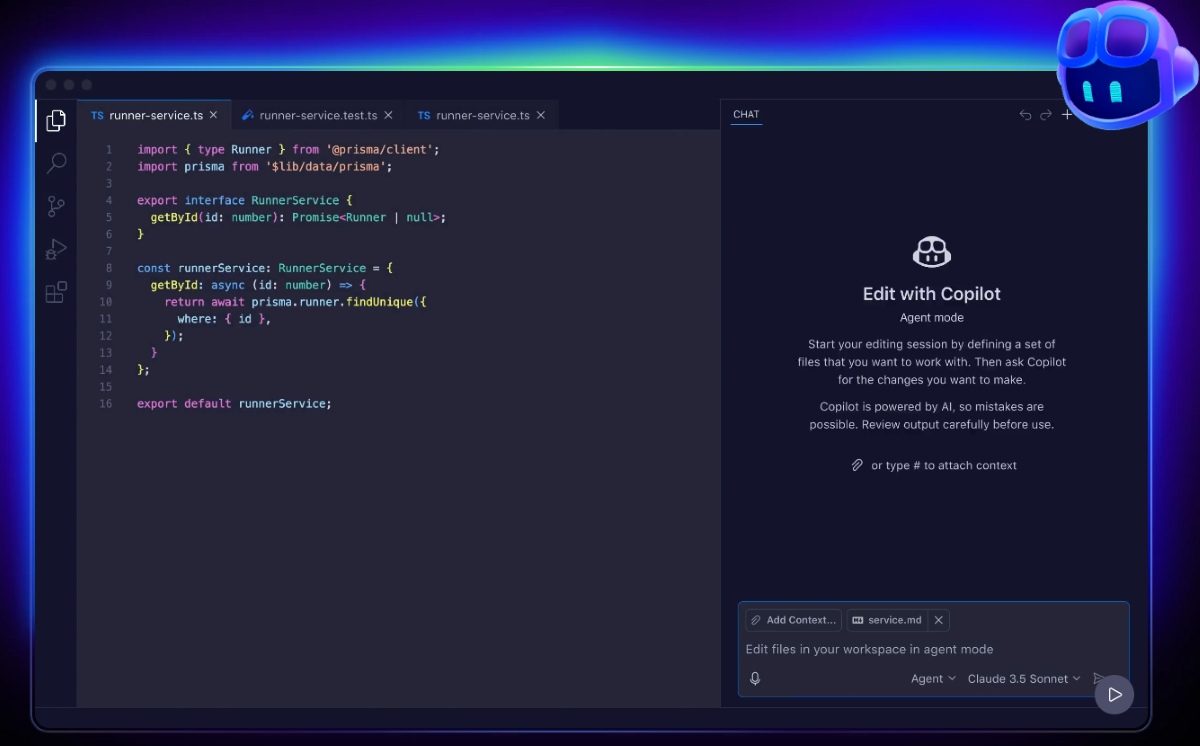

1. GitHub Copilot — Best All-around for Teams Already on GitHub

GitHub Copilot adds AI code generation, chat, and inline suggestions directly to your workflow. This code generation tool integrates with Visual Studio Code, Visual Studio, and JetBrains IDEs. You can also use it in GitHub.com (PRs) and the terminal via Copilot in the CLI.

Standout Features:

- Inline suggestions and Copilot Chat for explanation and quick fixes.

- PR help with summaries, diff questions, and patchable review suggestions.

- CLI support for command explanations and suggestions.

Advantages:

- Strong GitHub repo and PR integration.

- Mature editor experience across IDEs.

- Org controls, audit logs, and repo-level exclusion for governance

- Clear stance. Customer data is not used for training models.

- Helpful for learning and onboarding support through explanations.

Drawbacks:

- SaaS only. No self-hosted Copilot option.

- Advanced admin features tied to GitHub Enterprise Cloud.

- Expect GitHub-first workflows.

Privacy and Security:

- Individuals: By default, GitHub and its providers do not use your prompts, suggestions, and code snippets for model training.

- Businesses/Enterprises: Copilot includes auditability and policy controls.

Pricing:

- Individuals: Copilot Pro $10/mo or $100/yr; Pro+ $39/mo or $390/yr; Free tier with limited quotas.

- Organizations: Business $19/user/mo; Enterprise $39/user/mo (GitHub Enterprise Cloud).

Best for: Teams already using GitHub for repos, pull requests, and CI/CD. Users can access AI assistants without changing tools.

2. Amazon Q Developer — Best for AWS-heavy stacks

Amazon Q Developer is AWS’s IDE/CLI assistant and console copilot. Teams use it to build, operate, and modernize apps. The tool works in Visual Studio Code, Visual Studio 2022 (Windows only), JetBrains, and Eclipse. It is also available in the terminal, the AWS Console, Microsoft Teams, Slack, GitHub (preview), and GitLab.

Standout Features:

- Agentic coding / AI coding agent for multiple tasks. Amazon Q Developer helps write/modify files, generate diffs, and run shell commands with live progress.

- Intelligent code suggestions and chat in IDEs and CLI.

- Security and code review with vulnerability scanning and PR help.

- Docs and Q&A features that generate READMEs and answer AWS or code questions.

- Modernization agents. Some examples are Java upgrades (8→17/21), SQL conversion (Oracle→PostgreSQL), and .NET porting from Windows→Linux.

Advantages:

- Deep AWS context in the console for costs, architecture, and incidents. IAM Identity Center adds governance.

- Built-in modernization for Java/.NET with clear summaries and diffs.

- Enterprise posture with auditability and policy controls. The Pro tier keeps proprietary content out of service improvement.

Drawbacks:

- Limited value if you don’t use AWS services.

- Quotas and overages apply to transformation workloads. Pricing is tied to lines of code.

- SaaS extension risk. A July 2025 incident hit the Visual Studio Code extension before AWS patched it.

Pricing:

- Free tier: 50 agentic requests/month; 1,000 lines of code transformation/month.

- Pro: $19/user/month with 4,000 LOC per user/month pooled at the payer account; overage $0.003 per LOC; adds admin dashboards, Identity Center support, and IP indemnity.
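To see how the Pro numbers play out, here is a quick worked estimate. It assumes the $0.003 per LOC figure applies only to transformed lines beyond the pooled allowance, and that pooling is a simple sum across seats. The function name and the team size are hypothetical, so check the current AWS pricing page before budgeting.

```python
def q_pro_monthly_cost(
    users: int,
    transformed_loc: int,
    seat_price: float = 19.0,        # USD per user per month (from the list above)
    loc_per_user: int = 4000,        # included transformation LOC per user
    overage_per_loc: float = 0.003,  # USD per LOC beyond the pooled allowance
) -> float:
    """Estimate a monthly bill: seats plus overage on pooled transformation LOC."""
    pooled_allowance = users * loc_per_user
    extra_loc = max(0, transformed_loc - pooled_allowance)
    return round(users * seat_price + extra_loc * overage_per_loc, 2)


# Hypothetical team: 10 seats transforming 100,000 lines in one month.
# Seats: 10 * $19 = $190. Allowance: 40,000 LOC. Overage: 60,000 * $0.003 = $180.
cost = q_pro_monthly_cost(users=10, transformed_loc=100_000)
print(f"${cost:.2f}")  # $370.00
```

In this example the overage nearly doubles the bill, which is why large modernization jobs are worth estimating up front.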

Best for: Teams that already build on AWS and want AI code completion and code generation close to their stack. You get an IDE-integrated assistant and ready-made agents for Java/.NET upgrades. The tool also provides security scanning and in-console help, so you don’t have to stitch together separate tools.

3. Google Gemini Code Assist — Best for Android/Google Cloud

Gemini Code Assist is Google’s AI coding assistant. It brings LLM-powered help into IDEs like Visual Studio Code, JetBrains, and Android Studio. A Gemini CLI adds support for the terminal. Moreover, it connects to Google Cloud developer platforms for a full cloud-based development environment.

Standout Features:

- Completions and AI programming features: code documentation, debugging assistance, and unit-test stubs.

- Agent Mode for multi-step tasks, including plan, propose, and approve, is currently in preview.

- Android strengths include composing UI mockups and Gradle error fixes. You also get Logcat/App Quality Insights analysis.

- Cloud integrations into Firebase, Colab Enterprise, BigQuery data insights, and Cloud Run editor suggestions.

Advantages:

- Strong Google/Android alignment via Android Studio. Wide GCP reach through Firebase, BigQuery, and Cloud Run. All reduce context-switching.

- Enterprise editions include enterprise-grade security and indemnification. The business site highlights the usage of Gemini 2.5 and up to 1M-token context.

- Citations and governance docs explain how the tool cites sources and handles quoted code.

Drawbacks:

- SaaS-first delivery. No self-hosted option.

- Agent Mode is still in preview. So, features may shift.

- The reach is limited. It delivers the most value for Android and GCP users.

Pricing:

- Standard: $22.80/user/month (monthly commitment) or $19/user/month (12-month commitment).

- Enterprise: $54/user/month (monthly commitment) or $45/user/month (12-month commitment).

- Individuals: a no-cost version for personal use is available (see Code Assist site).

Best for: Android teams and Google Cloud orgs that want an IDE-native AI coding assistant tool. The bonuses are terminal support and cloud integrations under enterprise-grade controls.

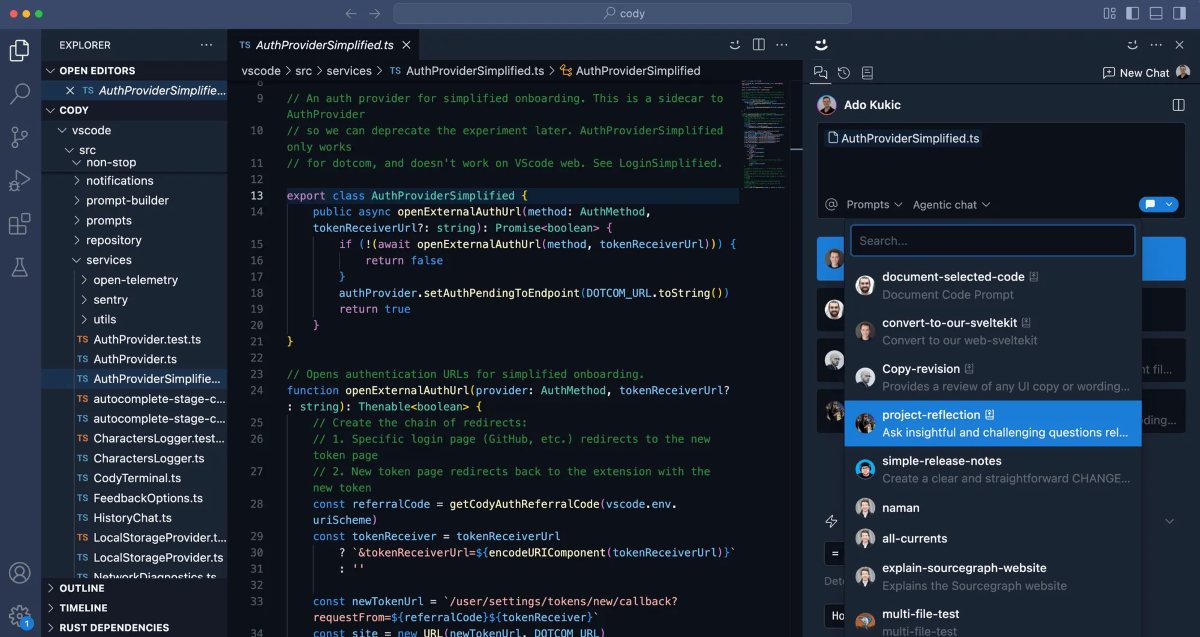

4. Cody – Best for Sourcegraph

Cody is Sourcegraph’s AI pair programmer. It provides AI pair programming with the whole codebase context. You can use it when working on Visual Studio Code, JetBrains IDEs, and Visual Studio (extension). An experimental Neovim client is also available.

Standout Features:

- Deep, multi-repo context via Sourcegraph’s search/graph and RAG. Answers point to the right files across large codebases.

- Autocomplete, auto-edit, chat, and a prompt library for code refactoring, tests, and docs. Admins can control which LLMs to use.

- Flexible model choices, including Anthropic, Google, and OpenAI. All come with enterprise governance.

Advantages:

- Pairs with Sourcegraph Code Search to support very large repos and multiple hosts.

- Enterprise deployment options, such as self-hosted or single-tenant cloud, SSO, audit logs, and documented retention practices.

- Strong security posture. No retention or model training on customer code for enterprise plans.

Drawbacks:

- Enterprise-only since Cody Free/Pro ended in July 2025.

- Best results come when paired with Sourcegraph indexing. It’s heavier than a simpler SaaS plugin.

Pricing: Only Enterprise plans. Pricing is not public. Contact Sourcegraph sales for details.

Best for: Large engineering orgs with monorepos or multi-repo setups. Ideal for teams that need AI for coding with governed LLM support, SSO, and audit logs inside mainstream IDEs.

5. JetBrains AI Assistant — Best inside JetBrains IDEs

JetBrains AI Assistant is the vendor’s native AI programming assistant. It mainly serves IntelliJ-platform IDEs (IntelliJ IDEA, PyCharm, WebStorm, GoLand, CLion, etc.). Installed as a plugin, this code completion and code generation tool works inside the editor UI. The autonomous coding agent Junie comes with the subscription and is available in select IDEs.

Standout Features:

- Context-aware suggestions in the editor. The tool can explain code, generate/complete snippets, draft docs, and write commit messages.

- Agentic automation to help plan steps and edit files. You can also use it as an AI code review tool to run tests/commands and verify results.

- Local model support / offline mode for some tasks. The Enterprise plan can route to OpenAI, Azure OpenAI, Vertex AI, Bedrock, or Hugging Face.

Advantages:

- Tight, native integration across JetBrains IDEs (IntelliJ, PyCharm, WebStorm, GoLand, CLion, Rider…), with refactors, inspections, and project-aware navigation.

- Numerous Enterprise controls, such as on-prem/air-gapped deployments and provider selection. You also get request/response logging to your object storage for audit.

- Quota transparency and top-ups. Subscriptions map dollars to AI Credits with clear consumption and optional top-ups.

Drawbacks:

- Feature availability varies. Junie is limited to some IDEs. Plus, certain features/tiers are region-specific.

- Heavy agent or chat use can burn credits quickly. Larger models consume more, so quota management can be tricky.

- Full self-hosted governance requires Enterprise tiers.

Privacy, security & governance:

- Centralized auth (OAuth/SAML) via IDE Services/Hub.

- Granular data-collection controls per profile.

- Auditable logs of AI requests/responses, including code snippets, with storage in your environment.

Pricing:

- AI Free: small monthly cloud credit; unlimited local code completion support (where available).

- AI Pro: $10/user/month (or annual equivalent); 10 AI Credits per 30 days.

- AI Ultimate: $30/user/month; 35 AI Credits per 30 days (bonus credits under the newer quota model).

- AI Enterprise: self-host/VPC and provider routing via IDE Services; contact JetBrains for org pricing.

Best for: Teams standardized on JetBrains IDEs. Ideal for users who want the most seamless automated test generation. This IDE-native AI coding tool can also grow with your needs, as long as you choose Enterprise controls.

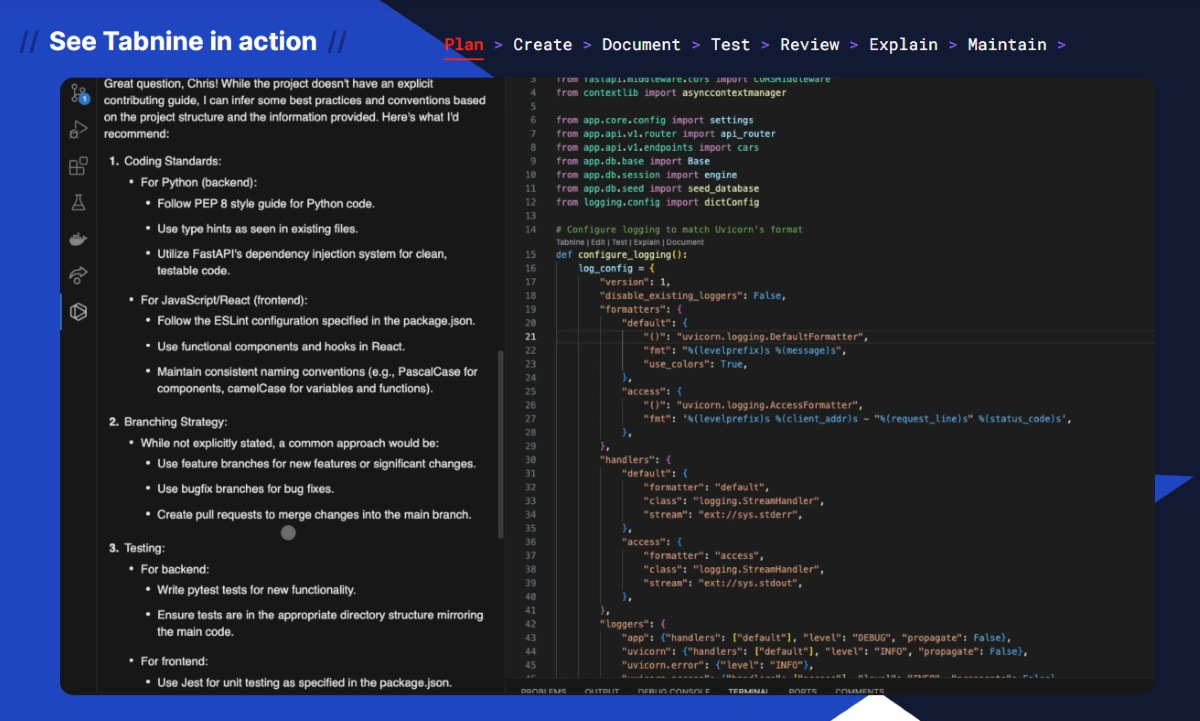

6. Tabnine — Best for privacy-first and self-hosted control

Tabnine is an AI code generator designed for strict privacy and deployment flexibility. The tool works as a plugin in all major IDEs, like VS Code, JetBrains, Eclipse, and Visual Studio. It also supports Neovim for teams that prefer a terminal-based editor. Another plus is that you enjoy collaboration features for a productivity boost.

Standout Features:

- Fast completions and chat for explanations, tests, and refactors. All these AI code completion, AI code generation, and AI code review features focus on code quality improvement.

- Enterprise agents like test-case generation, code review, and Jira tasks. There is also an Advanced Context Engine for GitHub, GitLab, Bitbucket, and Perforce for improved code completion and code generation.

- Flexible models to choose from. You can select Tabnine’s protected models or connect third-party/OSS models via private endpoints.

Advantages:

- Privacy first, “no-train, no-retain.” Your code isn’t used for training or stored when you use Tabnine’s protected models.

- Various self-hosting options. Full deployment control with SaaS, VPC, on-prem, or even fully air-gapped environments.

- Enterprise governance with SAML SSO, SCIM/IdP sync, roles, and admin controls.

Drawbacks:

- SaaS-limited for Free/Dev users. Enterprise plans unlock the richest features and private deployment.

- Self-hosted setups require platform/DevOps effort (Kubernetes, networking, logging).

Privacy and Security:

- Self-hosted clusters keep code in your environment.

- Air-gapped installs route metrics/logs to your own Prometheus/log aggregator.

- No code or PII is sent to Tabnine from an air-gapped environment.

- Self-hosted shares only operational metrics when enabled.

Pricing:

- Dev: $9/user/month (single-user) with a 14-day free preview.

- Enterprise: $39/user/month (annual) with private deployment options (SaaS or self-hosted) and advanced agents/administration.

Best for: Teams that seek the best AI for programming when it comes to privacy. You will get VPC/on-prem/air-gapped options and enterprise-grade governance. The best thing is that you don’t have to give up modern IDE coverage.

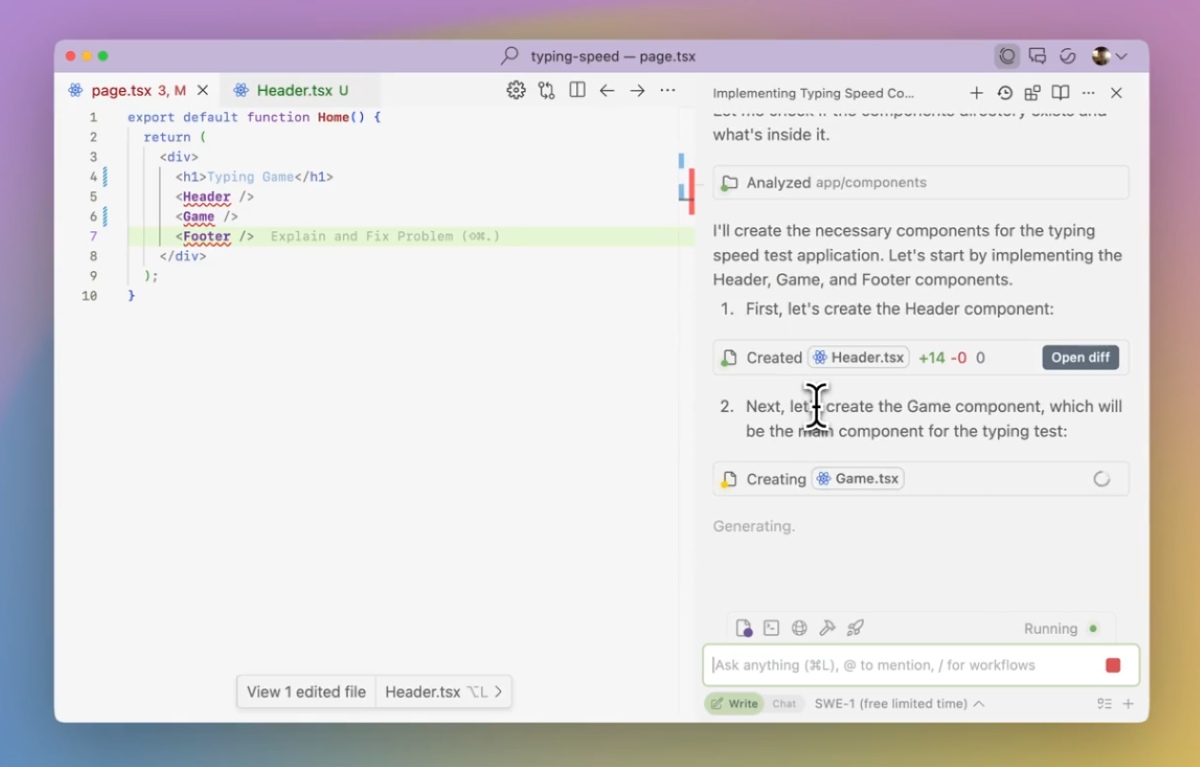

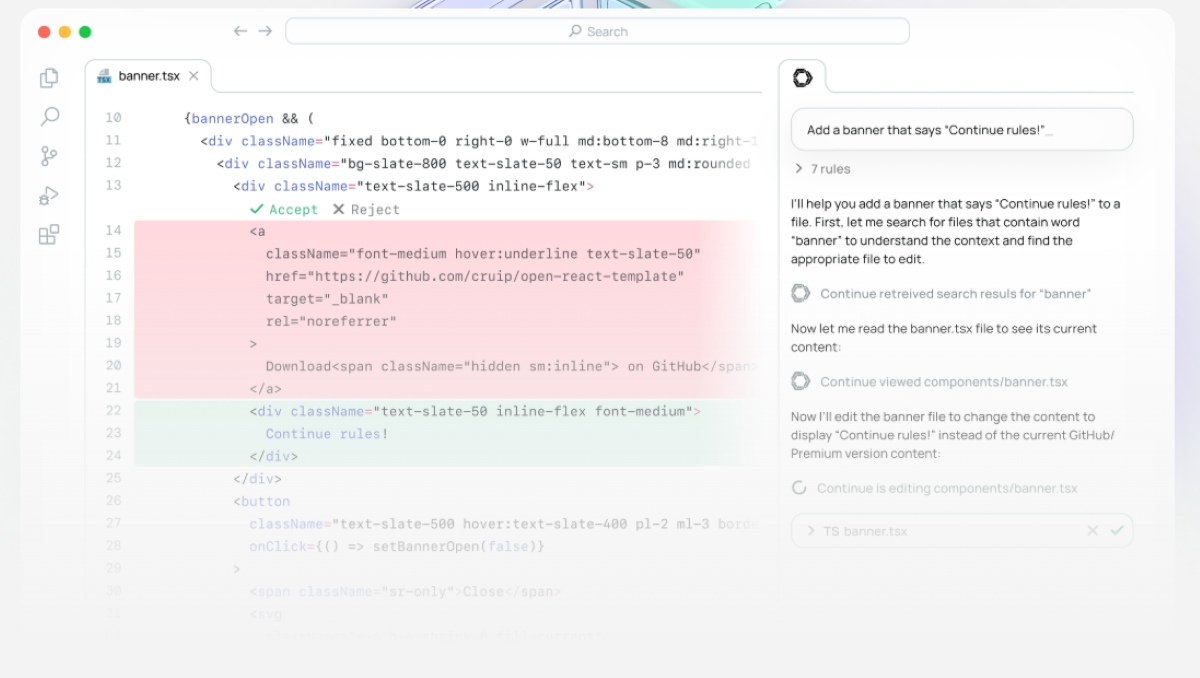

7. Windsurf (Codeium) — Best AI-native IDE experience

Windsurf is an AI coding assistant built as a standalone IDE for macOS, Windows, and Linux. Its Cascade agent powers multi-step edits, repo-wide reasoning, and natural language prompts. Plugins for other IDEs exist. Yet, the full experience of code completion, code generation, and code review is in the Windsurf Editor.

Standout Features:

- Cascade agent for multi-step edits, intent tracking (edits, terminal, clipboard), and in-the-loop approvals.

- Previews and one-click deployment directly inside the IDE, so you can view and ship web apps without context switching.

- Inline assist with Tab/Supercomplete, inline refactor commands, and terminal commands.

- MCP/plugin store to connect external tools (Slack, Stripe, Figma, DBs) and enforce team rules.

- PR reviews (beta) that add automated comments on GitHub pull requests.

Advantages:

- AI-first workflow. Less need to switch contexts because build, preview, and deploy stay in one place.

- Strong governance options, such as cloud (zero-data-retention default), Hybrid (customer-managed data plane), or full Self-hosted with BYO models.

- Numerous enterprise features like SSO, RBAC, audit logs, and compliance (regional hosting, EU, and FedRAMP High).

Drawbacks:

- Full functionality requires Windsurf Editor. Plugins in Visual Studio Code/JetBrains only cover autocomplete.

- Some external integrations don’t support zero retention. You need explicit admin approval.

- Self-hosted deployments miss some of the most advanced Cascade/Editor features and need more ops work.

Privacy and Security:

- Zero-data-retention is the default on Teams/Enterprise. Individuals can opt in.

- Attribution filtering and audit logs are available on enterprise deployments.

- Regional options include EU and FedRAMP High (via AWS GovCloud/Palantir).

Pricing:

- Free: $0/user/month — 25 prompt credits/mo, optional zero-retention, limited deploys.

- Pro: $15/user/month — 500 credits/mo, add-ons $10/250 credits, SWE-1 currently promotional at 0 credits, 5 deploys/day.

- Teams: $30/user/month — 500 credits/user/mo, add-ons $40/1,000, Windsurf Reviews, centralized billing/analytics, automated zero-retention, SSO +$10/user/mo.

- Enterprise: from $60/user/month — 1,000 credits/user/mo, RBAC, SSO, volume discounts >200 seats, and a Hybrid deployment option.

Best for: Teams that need AI coding assistant tools with agentic workflows and enterprise-grade privacy. The tool also comes with multi-language support. It’s the best AI for coding, especially useful when dealing with web apps, rapid iteration, and governed org rollouts.

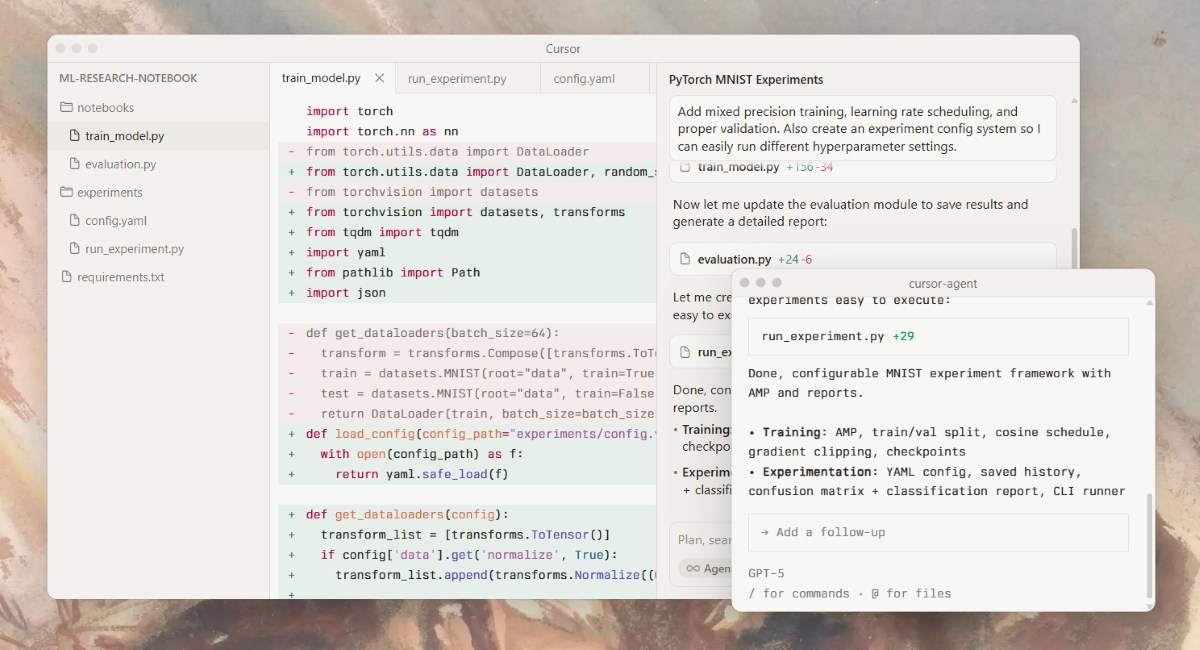

8. Cursor — Best for rapid iteration in an AI code editor

Cursor is a desktop code-writing AI editor with an integrated agent and a CLI for terminal-first workflows. As an AI code completion, code generation, and code review tool, it enables quick multi-file edits and CI-driven workflow automation. If you need the best AI for programming, Cursor stands out for its editor-first design.

Standout Features:

- Agent mode for end-to-end tasks, including invoke, review, and apply.

- Multi-line/multi-file edits, smart rewrites, and tab-through suggestions that speed up refactors.

- Bugbot add-on for code review automation. You get AI-driven PR reviews with monthly caps.

- CLI agent to script edits and connect with MCP tools. Cursor also supports AGENTS.md and rules configs.

Advantages:

- Fast loop from prompt and preview diff to apply without switching tools.

- Enterprise readiness with privacy and security (SOC 2, on-premise, VPC) options. Other perks include zero-data-retention mode and published hosting/data-flow docs.

- Handles very large codebases and big teams (tens of millions of LOC reported).

Drawbacks:

- SaaS-first model. No full on-prem edition is advertised. Data security depends on privacy mode and approved hosts.

- Bugbot is a paid add-on, separate from editor plans.

Pricing:

- Pro (editor): usage-based plan that includes $20/month of model usage (at provider API rates); unlimited “Auto” routing; overages available at cost. (Cursor’s June 2025 pricing update.)

- Ultra (editor): $200/month with ~20× Pro usage for power users.

- Teams (business): $40/user/month with org controls (Privacy Mode enforcement, SSO, RBAC, analytics). Enterprise: custom.

- Bugbot add-on (AI PR reviews): $40/month (Pro) with unlimited reviews on up to 200 PRs/month; Teams: $40/user/month with pooled usage; Enterprise custom.

Best for: Teams that need AI code generation and AI code review tools, rapid iteration, automated multi-file diffs, and optional PR review automation. Strong fit for enterprises that need privacy controls.

9. Continue (open source) — Best for “no lock-in” customization

Continue is an open-source AI coding assistant. It runs in Visual Studio Code and JetBrains with a companion CLI for terminal workflows. Developers use this code completion and code generation tool to build and run custom agents across their IDE, terminal, and CI. The best thing is that you can work with your own rules/tools (including MCP) and favorite models (local or cloud). Plus, it allows you to keep data local and avoid vendor lock-in. The project is Apache-2.0 licensed.

Standout Features:

- Agent, Chat, and Edit workflows for multi-file changes, explanations, and quick refactors.

- Model flexibility as you use your own API keys (OpenAI or Anthropic) or run local models with Ollama, Llama.cpp, or LM Studio.

- MCP tools integration to connect external systems like databases and services.

- Hub and source-control flow to version agents/blocks and sync them with teams via GitHub Actions.

Advantages:

- Zero lock-in with open-source extensions, CLI, and your own infra. No vendor usage caps.

- Team governance with allow/block lists, managed proxies, and secrets management.

- Offline and self-reliant. Guides run without internet, and self-hosting models keep everything local.

Drawbacks:

- Not a one-click SaaS. Users need to deal with DIY setup and ops. The tool also requires platform work for model routing, policies, and tooling.

- Feature maturity varies by provider. MCP tool support has limits depending on the model.

Pricing:

- Solo: $0/developer/month (open-source extensions + CLI; BYO compute and model/API keys). Models add-on: +$20/mo for access to multiple frontier models via Continue.

- Team: $10/developer/month — central management/governance (allow/block lists, managed proxy for secrets). Models add-on: +$20/mo, optional.

- Enterprise: contact sales (adds enterprise controls and deployment options via Continue Hub).

Best for: Platform-minded teams that want code completion and code review tools with customization, vendor independence, and governance. It will also work well if you need the best AI for code deployment across the terminal and IDEs.

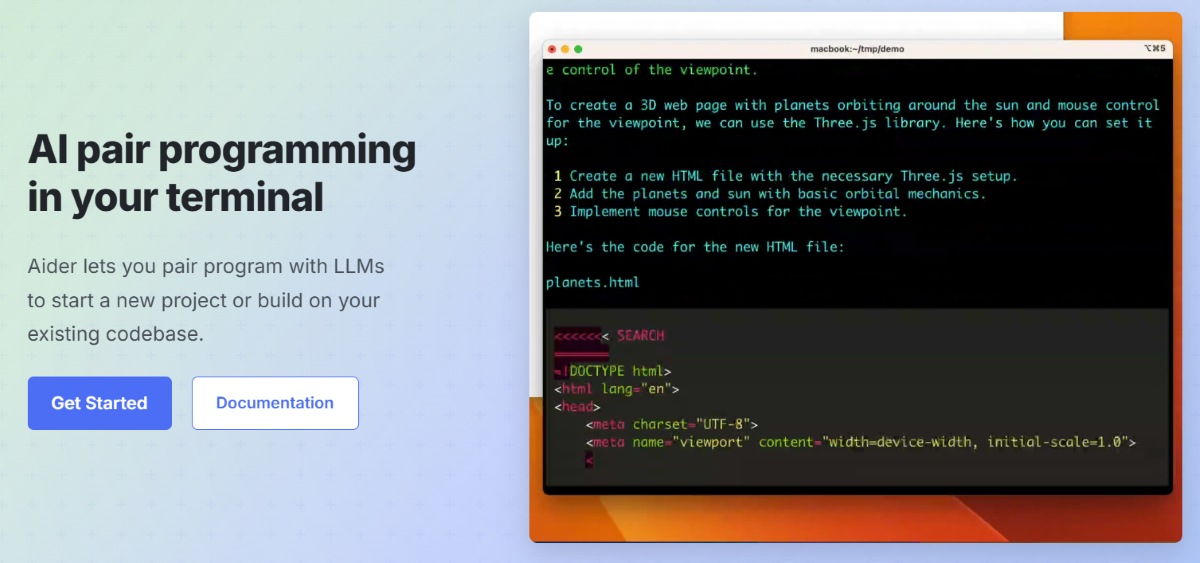

10. Aider (open source CLI) — Best for terminal-driven refactors

Aider is another standout example when comparing open-source vs closed-source tools. This command-line AI coding tool edits code through Git-tracked diffs. You run it inside your repo, add the files to consider, and describe the change. Aider then proposes, applies, and commits patches. It’s model-agnostic and keeps you in a tight code review loop, which makes it one of the best AI tools for terminal-driven workflows.

Standout Features:

- Git-first workflow, as every change lands as a readable commit. You can review, revert, and bisect it.

- Scoped context, useful on large codebases. You explicitly “/add” the files or glob patterns Aider can touch.

- Multi-file refactors that keep tests/docs in sync. You can rename symbols, extract functions/modules, and update imports.

- Promptable diffs. Natural-language tasks yield patch sets.

- Scriptable CLI. Aider works in terminal sessions, shell scripts, and CI steps for supervised migrations.

- Flexible models, including OpenAI, Anthropic, Google, DeepSeek, or a local gateway.

Advantages:

- Safety and traceability. Commit-by-commit history creates audit trails and speeds code reviews.

- Speed up maintenance work. Ideal for repetitive upgrades, such as API changes, typing, and lint/style cleanups.

- No IDE dependency. The tool plays nicely with editors, test runners, linters, and hooks.

- Open source and control over the cost. You pay only for the model you choose and can constrain the context to save tokens.

Drawbacks:

- No built-in model. You must configure providers or a local server.

- Terminal skill required. Best for developers comfortable with Git, shells, and reviewing diffs.

- Not a full IDE. The tool lacks rich inline UI, live debugging, and project indexing typical of IDE assistants.

- Prompt hygiene matters. Ambiguous requests can produce noisy diffs. So, be precise and keep scopes small.

Pricing:

- Open Source License: Aider is distributed under a permissive license. You can download, use, and even modify the tool without charge.

- No Subscription Fees: There are no recurring monthly or annual plans for using Aider itself.

- Bring Your Own API Key: To access AI features, link Aider to an LLM provider, like OpenAI’s GPT-4 or Anthropic’s Claude. You pay the provider directly based on their usage-based pricing.
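
Because the only recurring cost is the provider's usage-based pricing, a quick back-of-the-envelope estimate can help with budgeting. The sketch below is an illustration only: the token counts, the per-million-token prices, and the monthly task count are placeholder numbers, not real provider prices.

```python
def estimate_cost_usd(input_tokens, output_tokens,
                      input_price_per_m, output_price_per_m):
    """Estimate provider cost for one task.

    Prices are expressed in USD per one million tokens.
    """
    input_cost = (input_tokens / 1_000_000) * input_price_per_m
    output_cost = (output_tokens / 1_000_000) * output_price_per_m
    return input_cost + output_cost


if __name__ == "__main__":
    # Placeholder numbers: look up your provider's current price list.
    per_task = estimate_cost_usd(
        input_tokens=40_000,
        output_tokens=4_000,
        input_price_per_m=3.00,
        output_price_per_m=15.00,
    )
    tasks_per_month = 200  # hypothetical workload
    print(f"Per task: ${per_task:.2f}")
    print(f"Per month: ${per_task * tasks_per_month:.2f}")
```

Input tokens usually dominate in repo-aware workflows, which is why constraining the files you add to the context tends to be the most direct cost lever.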

Best for: Teams and engineers who work in the terminal and value transparent, reviewable refactors. You also get fine-grained control over models, costs, and commit history. Ideal for migration sprints, polyglot repos, and CI pipelines that need human-in-the-loop transformations.

How I Selected the Best AI Coding Tools in this List

1) Test design (apples-to-apples)

First, I ran all AI coding assistant tools through the same setup. The tasks covered code generation, code completion, and code review:

- Same repos and tasks: One backend service, one frontend app, one data/ML notebook.

- Tasks included: Small bug fix, CRUD endpoint, refactor, docstring pass, and unit-test scaffold.

- Same workflow: Prompt → preview diff → run tests/linters → open PR → review.

- Same guardrails: Secrets scanning on, license checks on, content-exclusion rules, and human approval required.

2) Scoring rubric (0–100 total)

Next, I scored each coding assistant based on clear criteria as follows. Each category includes task-level checks (e.g., % suggestions accepted, test pass rate) and qualitative notes (e.g., diff readability).

- Capabilities (30%) – quality of completions/edits, refactors, test generation, review suggestions.

- Privacy & Security (20%) – data retention controls, model-training policy, SSO/RBAC, audit logs.

- Developer Experience (20%) – latency, stability, UI clarity, accessibility, learning curve.

- Integrations (10%) – IDE coverage, PR bot quality, CI hooks, cloud/devtools fit.

- Cost & Licensing (10%) – clarity, predictability at team scale, free tier usefulness.

- Admin & Governance (10%) – policy enforcement, analytics, keys/secrets handling.
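
The weights above combine into a single 0–100 total as a weighted sum. The sketch below shows the arithmetic. It assumes each category is first scored on its own 0–100 scale, which the rubric does not spell out, and the example scores are made up for illustration.

```python
# Weights from the rubric above, in percent. They sum to 100.
WEIGHTS = {
    "capabilities": 30,
    "privacy_security": 20,
    "developer_experience": 20,
    "integrations": 10,
    "cost_licensing": 10,
    "admin_governance": 10,
}


def total_score(category_scores):
    """Combine per-category scores (each 0-100) into a weighted 0-100 total."""
    if set(category_scores) != set(WEIGHTS):
        raise ValueError("scores must cover exactly the rubric categories")
    for name, score in category_scores.items():
        if not 0 <= score <= 100:
            raise ValueError(f"{name} score must be between 0 and 100")
    weighted_sum = sum(WEIGHTS[name] * category_scores[name] for name in WEIGHTS)
    return weighted_sum / 100


if __name__ == "__main__":
    # Made-up scores for a hypothetical tool.
    example_scores = {
        "capabilities": 80,
        "privacy_security": 90,
        "developer_experience": 70,
        "integrations": 60,
        "cost_licensing": 50,
        "admin_governance": 90,
    }
    print(total_score(example_scores))  # prints 76.0
```

Because Capabilities carries 30% of the weight, a 10-point change there moves the total by 3 points, while the same change in Integrations moves it by only 1.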

3) Metrics I actually measured

Beyond the scores, I tracked real-world results of the AI code generation and AI code completion tools using these metrics:

- Time-to-first-PR and median time to merge

- Review rework rate (requested changes per PR)

- Test coverage delta on changed files

- Defects/1k LOC found in CI post-change

- Acceptance rate of AI-suggested diffs
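
For readers who want to track the same metrics, here is a minimal sketch of how each one can be computed from per-PR records. The function names and the sample numbers are assumptions for illustration, not the data behind this review.

```python
from statistics import median


def median_hours_to_merge(hours_per_pr):
    """Median time from PR open to merge, in hours."""
    return median(hours_per_pr)


def rework_rate(change_requests_per_pr):
    """Average number of requested changes per PR."""
    if not change_requests_per_pr:
        return 0.0
    return sum(change_requests_per_pr) / len(change_requests_per_pr)


def coverage_delta(coverage_before, coverage_after):
    """Change in test coverage (percentage points) on the changed files."""
    return coverage_after - coverage_before


def defects_per_kloc(defects, changed_loc):
    """Defects found in CI per 1,000 changed lines of code."""
    if changed_loc == 0:
        return 0.0
    return defects * 1000 / changed_loc


def acceptance_rate(accepted_diffs, suggested_diffs):
    """Share of AI-suggested diffs that were accepted (0.0 to 1.0)."""
    if suggested_diffs == 0:
        return 0.0
    return accepted_diffs / suggested_diffs


if __name__ == "__main__":
    # Made-up sample data for five PRs.
    print("Median hours to merge:", median_hours_to_merge([5.0, 12.5, 3.0, 26.0, 8.0]))
    print("Rework rate:", rework_rate([0, 2, 1, 0, 1]))
    print("Coverage delta:", coverage_delta(62.0, 68.5))
    print("Defects per 1k LOC:", defects_per_kloc(3, 2400))
    print("Acceptance rate:", acceptance_rate(42, 60))
```

The median is used for merge time because a single long-running PR can skew an average badly in a small sample.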

4) Environment & controls

Environment and controls are important for choosing the best AI coding assistant and code review tools. I conducted tests under controlled conditions. Here is what I did:

- Tested on macOS/Windows/Linux with current versions of the listed IDEs/editors, where applicable.

- Used vendor defaults unless enterprise toggles were recommended.

- No production data. Repos used public or synthetic code with fake “secrets” to test scanners.

5) Fairness & limitations

I also made sure to note where this approach had limits. Some things I noticed are:

- I did not benchmark raw model accuracy in isolation. Instead, I scored the end-to-end developer experience when using AI code generation and AI code completion tools.

- Features, velocity, and pricing change frequently. Each entry has a “verify before rollout” note.

6) Update cadence

Finally, I set an update cadence for this list. I revisit the reviews on a schedule or when major releases land. Significant changes to a tool's code completion, code generation, or code review features adjust its score and recommendation.

Real-World Experiences and Use Cases

Teams see real benefits when using AI for coding. The biggest improvements come on routine tasks like boilerplate, CRUD endpoints, docstrings, and unit-test scaffolds, so engineers can focus more on design and code review. Moreover, AI code generation and AI code completion tools index the repo and handle context management. Engineers then spend less time asking “where is this used?”

Representative use cases:

- Onboarding: The coding assistant answers code-navigation questions, drafts interface stubs, and proposes tests through code generation and code completion. Users can track time-to-first-PR and review cycles to measure effectiveness.

- Modernization (Java/.NET): The agent proposes batched diffs with migration notes. Users monitor hours spent per 100 files and post-upgrade incidents.

- PR review acceleration: The bot leaves patchable comments and flags security issues. Then, engineers track median time to merge and rework rates.

Process that works:

- Keep changes small and reviewable (function-level diffs).

- Run tests on AI-touched code and linters/CI before merge.

- Restrict access scope (least-privilege repos; exclude sensitive folders).

- Keep audit logs and run license checks.

- Maintain a shared prompt/policy library with good/bad examples.
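
The "small and reviewable" rule in the list above can be enforced mechanically. The sketch below sums changed lines from the output of `git diff --numstat` and compares the total against a limit. The 200-line threshold and the function names are assumptions for illustration. Pick a limit that matches your own review habits.

```python
def changed_lines(numstat_text):
    """Sum added and deleted lines from `git diff --numstat` output.

    Each line has the form "<added>\t<deleted>\t<path>".
    """
    total = 0
    for line in numstat_text.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        added, deleted = parts[0], parts[1]
        # Binary files are reported with "-" instead of counts; skip them.
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def is_reviewable(numstat_text, max_changed_lines=200):
    """Return True when the diff stays within the (hypothetical) size limit."""
    return changed_lines(numstat_text) <= max_changed_lines


if __name__ == "__main__":
    # Sample numstat output, made up for illustration.
    sample = "12\t3\tsrc/app.py\n40\t0\ttests/test_app.py\n-\t-\tassets/logo.png\n"
    print("Changed lines:", changed_lines(sample))
    print("Reviewable:", is_reviewable(sample))
```

In practice you would feed this the output of `git diff --numstat` for the branch under review and fail the CI step when `is_reviewable` returns False.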

Pilot in two weeks:

- Choose one repo and 5–10 repeatable tasks.

- Turn on secret scanning, license checks, and logging.

- Set KPIs for 2 weeks. Then, measure time-to-PR, rework, coverage delta, and escaped bugs.

- Enforce small diffs, tests, and human approval.

- Run a 10-minute daily retro to refine prompts/policies.

- Scale, limit, or stop based on metrics, not anecdotes.

Conclusion

The best AI for coding offers real-time suggestions that fit the way you already work. Such tools help both new hires and experienced developers. The right AI coding assistant should balance features, security, and ease of adoption. Consider your needs when choosing a tool for modern software development. Want to harness the power of AI? Contact Saigon Technology now. Our AI experts will guide your project to success!

FAQs

1. What Are the Differences Between Closed-Source and Open-Source AI Coding Assistants?

When choosing an AI coding assistant, you may consider closed-source vs. open-source solutions. So, what is the difference?

Closed-source assistants use proprietary models and plugins from a vendor. You access them through an IDE or cloud integrations under a commercial license.

On the other hand, open-source assistants are stacks built from open models and tools (model + retrieval + IDE plugin). You can host and modify them under an OSS license.

Here are the differences between these two AI code generation and code review options:

- Visibility & control: Closed tools like GitHub Copilot and Codeium give limited internals but strong guardrails. Meanwhile, open-source tools give full code access and tunability.

- Deployment: Closed assistants are faster to pilot in an enterprise network. Open assistants often support a self-hosted model. Running them on-prem or in a private VPC is straightforward but requires ops effort.

- Data handling: Closed-source models rely on vendor support and policies/DPAs. On the other hand, you define your own data flow and retention rules when working with open-source models.

- Customization: Closed-source assistants are largely limited to configs and prompts. In contrast, open-source ones offer deep customization, including fine-tuning, plugins, and policies. OpenAI Codex, OpenHands, and Bolt.Diy stand out for their customization features.

- Compliance: Closed-source tools come with built-in audits, SSO/RBAC, and logs. Meanwhile, when using open-source tools like Aider and Continue, you must own compliance controls and evidence.

- Cost model: Closed-source AI code generation solutions usually have usage-based pricing or per-seat licenses. With open-source options, you have to consider infrastructure and ops costs. The per-unit cost can be lower at scale, but the initial setup demands more effort.

- Performance: Closed source often ships with mature evals/UX. But open source varies by model and tuning quality.

- Ecosystem: Closed-source assistants provide polished IDE/PR integrations. Meanwhile, open-source ones have a wide community but uneven stability.

2. Is ChatGPT Best for Coding?

ChatGPT is an excellent choice for generating code, explaining snippets, debugging, and exploring coding ideas. However, it is not always the top solution for real-time code completion. Many developers combine ChatGPT with an in-IDE tool and other code review tools for the best results.

3. Who Can Use an AI Coding Assistant?

AI coding assistants are designed for a wide range of users, not just professional developers.

- Beginners and Students: Step-by-step explanations from AI code generation tools help them learn syntax and practice coding more easily. Real-time feedback is another big plus.

- Professional Developers: They use the AI coding assistant to speed up repetitive tasks and generate boilerplate code. Debugging complex projects is simpler with AI support.

- Teams and Companies: Engineering teams adopt them to improve productivity. AI also helps maintain code quality with code review features and streamlines workflows across large projects.

- Non-technical Users: Even product managers, analysts, or hobbyists with limited coding experience can use AI tools. These assistants help them prototype, automate small tasks, or understand code snippets.

4. What Are Common Use Cases for Coding Assistant Tools?

AI coding assistant tools help developers save time and reduce errors throughout the software lifecycle. The most common use cases include:

- Code Generation: Users can write boilerplate code, functions, and standard patterns faster.

- Debugging and Error Fixing: AI assistants explain error messages and suggest corrections.

- Learning and Explanations: AI assistants break down complex code into plain language. They can also show equivalent examples in different programming languages.

- Testing Support: You can generate unit tests, integration tests, and edge case scenarios.

- Refactoring and Optimization: AI assistants suggest changes that improve the readability, performance, and maintainability of existing code.

- Documentation: AI assistants write comments, docstrings, and summaries to keep codebases clear.