Introduction to Performance Testing

Performance testing is a crucial part of software development, ensuring that applications can handle high traffic, sustained usage, and unexpected stress conditions. By simulating real-world user loads and extreme conditions, performance testing helps identify bottlenecks, performance degradation, resource exhaustion, and system failures before deployment.

This article explores the following types of performance testing:

✅ Breakpoint Testing – Identifying the system’s breaking point under extreme load.

✅ Endurance Testing – Evaluating system stability over prolonged periods.

✅ Load Testing – Measuring system performance under expected traffic conditions.

✅ Peak Testing – Assessing performance at maximum user load.

✅ Stress Testing – Pushing the system beyond its limits.

✅ Spike Testing – Measuring how a system handles sudden traffic surges.

✅ Soak Testing – Testing system behavior under sustained load.

✅ Scalability Testing – Ensuring the system can scale dynamically.

✅ Volume Testing – Evaluating database efficiency and query performance.

✅ Resilience Testing – Testing system recovery after failures.

Breakpoint Testing: Identifying System Limits and Performance Bottlenecks

What is Breakpoint Testing?

Breakpoint testing is a specialized performance test designed to determine the maximum number of users, transactions, or system requests a software application can handle before performance degradation, server crashes, or resource exhaustion occur.

By progressively increasing the load until the system degrades or fails, breakpoint testing helps organizations identify bottlenecks, optimize failover mechanisms, and strengthen system resilience before deployment.

Key Objectives of Breakpoint Testing

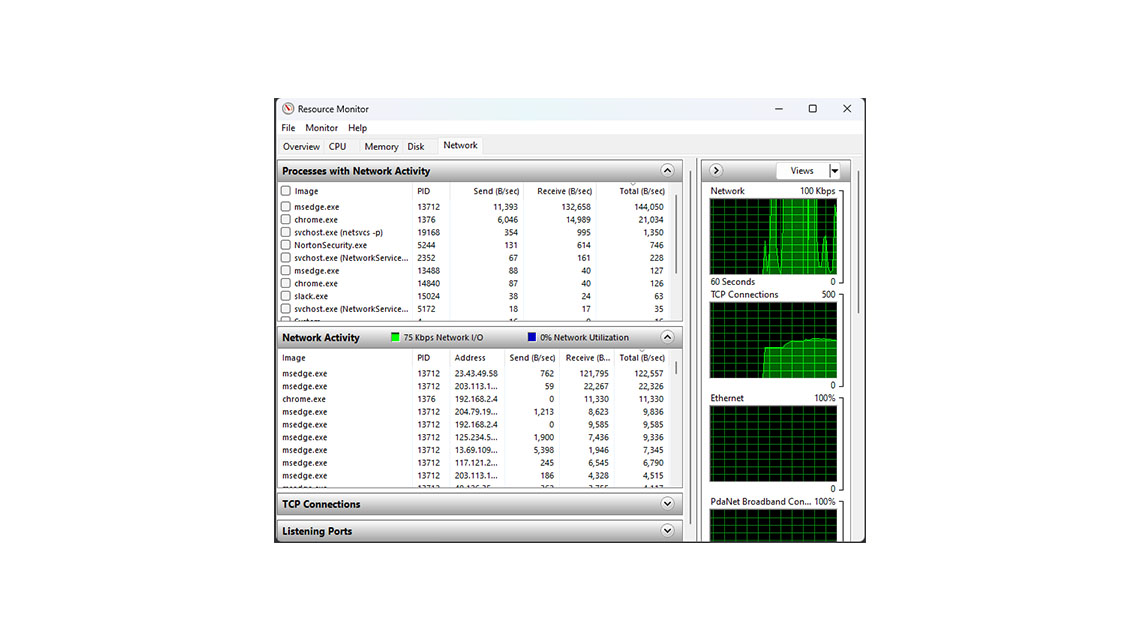

✔ Detect bottlenecks – Identifies components (e.g., databases, servers, or APIs) that cause slowdowns or failures.

✔ Measure the maximum number of users – Determines the highest concurrent user capacity before system breakdown.

✔ Assess failover mechanisms – Ensures automatic recovery solutions activate when failures occur.

✔ Analyze performance degradation – Observes when response times, transactions, and throughput begin to drop.

✔ Evaluate resource exhaustion – Tests CPU, memory, disk usage, and network bandwidth limits under stress.

How Breakpoint Testing Works

1️⃣ Define Load Parameters – Establish the baseline system performance and expected user load.

2️⃣ Gradually Increase Concurrent Users – Simulate increasing real-world traffic to find the breaking point.

3️⃣ Monitor System Behavior – Track database outages, network failures, and server crashes.

4️⃣ Identify Performance Thresholds – Detect when transactions slow down or fail due to overload.

5️⃣ Optimize System Recovery – Strengthen failover mechanisms and resource allocation to prevent breakdowns.
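The ramp-up loop in steps 2 to 4 can be sketched in Python as below. This is a minimal illustration and not a feature of any specific tool. `run_step` is a hypothetical callback that would drive your load tool at a given concurrency and report p95 latency and error rate. `fake_run_step` is a toy model with made-up numbers so that the sketch runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StepResult:
    users: int          # concurrent virtual users in this step
    p95_ms: float       # 95th-percentile response time observed
    error_rate: float   # fraction of failed requests (0.0 to 1.0)


def find_breakpoint(
    run_step: Callable[[int], StepResult],
    start_users: int,
    step_users: int,
    max_users: int,
    max_p95_ms: float,
    max_error_rate: float,
) -> Optional[StepResult]:
    """Raise load step by step; return the first step that breaches a threshold.

    Returns None if every step up to max_users stays within the thresholds.
    """
    users = start_users
    while users <= max_users:
        result = run_step(users)
        if result.p95_ms > max_p95_ms or result.error_rate > max_error_rate:
            return result
        users += step_users
    return None


def fake_run_step(users: int) -> StepResult:
    """Toy system model (hypothetical): latency grows linearly with load,
    and requests start failing above a hidden capacity of 350 users."""
    p95 = 100 + 0.5 * users
    errors = 0.0 if users <= 350 else (users - 350) / users
    return StepResult(users, p95, errors)


breakpoint_step = find_breakpoint(fake_run_step, start_users=50, step_users=50,
                                  max_users=1000, max_p95_ms=500, max_error_rate=0.01)
print(breakpoint_step)  # StepResult(users=400, p95_ms=300.0, error_rate=0.125)
```

In a real test, each step should be held long enough for metrics to stabilize before the next increase. The step size is a trade-off between total test duration and how precisely the breakpoint is located.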

Common Breakpoints in Software Systems

| Failure Type | Cause | Impact |
|---|---|---|
| Server Crashes | CPU overload, memory leaks | System downtime |
| Database Outages | Query overload, deadlocks, poor indexing | Transaction failures |
| Network Failures | High bandwidth consumption, latency issues | User disconnections |
| Resource Exhaustion | Excessive concurrent users, inefficient scaling | Application slowdown |

Real-World Example: E-Commerce Platform Testing

Scenario: A large e-commerce website prepares for a Black Friday sale.

Breakpoint Testing Goal: Determine whether the website can support 500,000 concurrent users making transactions.

Findings: At 350,000 users, performance degrades and database outages begin.

Solution: Engineers optimize failover mechanisms and implement load balancing, raising the breakpoint to 600,000 users.

Best Practices for Effective Breakpoint Testing

✔ Use Load Testing Tools – Employ tools like Apache JMeter, LoadRunner, and Gatling to simulate high traffic.

✔ Monitor Key Metrics – Track response times, CPU and memory usage, and transaction success rates.

✔ Automate Breakpoint Tests – Use cloud-based performance testing solutions to create real-world simulations.

✔ Conduct Regular Testing – Perform breakpoint testing before major events, software releases, or infrastructure upgrades.

✔ Optimize Resource Allocation – Adjust autoscaling, caching mechanisms, and database indexing to improve capacity.

Final Thoughts on Breakpoint Testing

Breakpoint testing is essential for software stability, ensuring applications can handle maximum load conditions without unexpected crashes or failures. By identifying bottlenecks, database outages, and network failures, organizations can implement failover mechanisms and performance optimizations to enhance system resilience.

Endurance Testing: Evaluating System Performance Over Extended Periods

What is Endurance Testing?

Endurance testing, also known as soak testing, is a crucial performance testing method that assesses how a system behaves under prolonged usage. Unlike short-term load tests, endurance testing is designed to detect gradual performance degradation, memory leaks, and resource exhaustion over extended periods.

This type of testing ensures that applications remain stable, resilient, and responsive after hours, days, or even weeks of continuous operation.

Key Objectives of Endurance Testing

✔ Detect Memory Leaks – Identifies inefficient memory usage that could cause system crashes over time.

✔ Assess CPU Usage and Resource Utilization – Monitors how long-running transactions impact CPU, disk I/O, and network bandwidth.

✔ Ensure System Stability and Resilience – Verifies that the system maintains error-free operation under prolonged usage.

✔ Measure Throughput and Transaction Rates – Evaluates if the system can process requests efficiently without slowing down.

✔ Monitor Data Integrity – Ensures that extended use does not corrupt or lose critical data.

How Endurance Testing Works

1️⃣ Define Testing Parameters – Set up test conditions, including expected traffic, transaction rates, and system workload.

2️⃣ Run Prolonged Load Tests – Simulate continuous system usage over an extended period (e.g., 24 hours to several weeks).

3️⃣ Track Key Performance Indicators (KPIs) – Monitor response times, resource utilization, error rates, and throughput.

4️⃣ Identify Performance Degradation – Look for signs of slower response times, increased CPU usage, or declining throughput.

5️⃣ Analyze System Logs and Error Reports – Detect memory leaks, data corruption, and unexpected failures.

Common Issues Detected During Endurance Testing

| Issue Type | Cause | Impact |
|---|---|---|
| Memory Leaks | Unreleased memory allocations | System crashes, slowdowns |
| CPU Overload | Excessive processing over time | Performance degradation |
| Data Integrity Issues | Errors in long-running data transactions | Corrupt or lost data |
| Increasing Error Rates | Resource depletion | Transaction failures |
| Declining Throughput | Resource exhaustion | Reduced system efficiency |

Real-World Example: Banking System Endurance Testing

Scenario: A global banking system undergoes endurance testing to validate its ability to process continuous transactions.

Test Goal: Ensure that the system can handle millions of transactions daily for weeks without downtime.

Findings: After 72 hours, error rates increased, and memory leaks caused a 40% slowdown in response times.

Solution: Developers optimized garbage collection, memory allocation, and database indexing, improving system resilience.

Best Practices for Effective Endurance Testing

✔ Use Performance Monitoring Tools – Employ tools like Apache JMeter, LoadRunner, and New Relic to track CPU usage, response times, and system resilience.

✔ Automate Test Execution – Utilize continuous testing frameworks to simulate real-world prolonged usage scenarios.

✔ Analyze System Logs – Regularly inspect error reports, transaction failures, and throughput patterns.

✔ Test Under Different Load Conditions – Vary transaction rates, resource consumption, and concurrent user levels to detect hidden bottlenecks.

✔ Ensure Data Integrity – Verify that long-term data processing does not result in corruption or inconsistencies.

Final Thoughts on Endurance Testing

Endurance testing is essential for applications that require high availability, long-term stability, and uninterrupted performance. By identifying memory leaks, performance degradation, and resource utilization inefficiencies, endurance testing ensures that applications remain efficient, stable, and resilient under prolonged usage.

Load Testing: Evaluating System Performance Under High Traffic

What is Load Testing?

Load testing is a crucial performance testing method that assesses how a system performs under expected and peak traffic conditions. It simulates real-world concurrent users and transactions to identify bottlenecks, response time delays, CPU and memory utilization issues, and error rates.

By conducting cloud-based performance testing, organizations can ensure that their applications remain scalable, stable, and responsive under high-traffic scenarios.

Key Objectives of Load Testing

✔ Identify Performance Bottlenecks – Detects CPU and memory utilization spikes, slow queries, and inefficient processes.

✔ Measure Response Time and Throughput – Tracks how quickly the system processes user requests under high load.

✔ Assess Concurrent User Handling – Determines how many virtual users the system can accommodate without performance degradation.

✔ Capacity Planning – Helps organizations plan infrastructure scaling based on expected traffic.

✔ Reduce Error Rates – Ensures that transactions are processed correctly without timeouts or failures.

How Load Testing Works

1️⃣ Define Load Scenarios – Establish expected user behavior, virtual users, and transaction patterns.

2️⃣ Simulate Traffic with Load Testing Tools – Use tools like Apache JMeter, LoadRunner, and Gatling to generate user requests.

3️⃣ Monitor System Metrics – Track CPU and memory utilization, response time, and error rates.

4️⃣ Analyze Performance Data – Identify throughput limits and areas requiring optimization.

5️⃣ Optimize System Performance – Address the bottlenecks found (e.g., through scaling, caching, or query tuning), then rerun the test to confirm the improvement.
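Steps 2 and 3 come down to running many concurrent virtual users and recording each request's latency and outcome. The Python sketch below does this with a thread pool. `fake_request` is a hypothetical placeholder for a real call, such as an HTTP request to the system under test. Dedicated tools like JMeter or Gatling add ramp-up control, pacing, and distributed load generation, none of which this sketch includes.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Sample = tuple[float, bool]  # (latency in seconds, request succeeded?)


def virtual_user(send_request: Callable[[], bool], requests: int) -> list[Sample]:
    """Simulate one user issuing `requests` calls back to back."""
    samples: list[Sample] = []
    for _ in range(requests):
        start = time.perf_counter()
        try:
            ok = send_request()
        except Exception:  # timeouts, connection errors, etc. count as failures
            ok = False
        samples.append((time.perf_counter() - start, ok))
    return samples


def run_load_test(send_request: Callable[[], bool], users: int,
                  requests_per_user: int) -> tuple[list[Sample], float]:
    """Run `users` virtual users concurrently; return all samples and elapsed seconds."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, send_request, requests_per_user)
                   for _ in range(users)]
        samples = [s for f in futures for s in f.result()]
    return samples, time.perf_counter() - started


def fake_request() -> bool:
    """Hypothetical stand-in for a real HTTP call: about 10 ms of simulated work."""
    time.sleep(0.01)
    return True


samples, elapsed = run_load_test(fake_request, users=20, requests_per_user=5)
print(f"{len(samples)} requests in {elapsed:.2f}s "
      f"({len(samples) / elapsed:.0f} req/s)")
```

Each virtual user sends its next request as soon as the previous one returns, which is a closed workload model. As a result, throughput here is bounded by response time. Real tests usually add think time between requests.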

Common Performance Metrics in Load Testing

| Metric | Description |
|---|---|
| Response Time | Measures how long the system takes to process requests. |
| Throughput | The number of requests processed per second. |
| Error Rate | Percentage of failed requests under load. |
| CPU and Memory Utilization | Tracks resource consumption under high traffic. |
| Concurrent Users | The number of users the system can handle simultaneously. |
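Several metrics in the table above can be derived from raw request samples. The sketch below computes throughput, error rate, and latency percentiles for one run. It uses the nearest-rank percentile method; some tools interpolate between samples instead, so their reported values can differ slightly. Concurrent users are not computed here because that number is an input to the test. The function names and the sample data are illustrative.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms: list[float], failures: int, duration_s: float) -> dict[str, float]:
    """Turn raw samples from one load-test run into the metrics in the table above."""
    total = len(latencies_ms)
    return {
        "throughput_rps": total / duration_s,
        "error_rate_pct": 100 * failures / total,
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "max_ms": max(latencies_ms),
    }


# Illustrative run: 100 requests taking 1..100 ms, 2 failures, over 10 seconds.
print(summarize([float(ms) for ms in range(1, 101)], failures=2, duration_s=10.0))
# {'throughput_rps': 10.0, 'error_rate_pct': 2.0, 'p50_ms': 50.0, 'p95_ms': 95.0, 'max_ms': 100.0}
```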

Real-World Example: E-Commerce Website Load Testing

Scenario: A major e-commerce retailer prepares for Black Friday sales by running load testing.

Test Goal: Ensure the website can handle 500,000 concurrent users without slowdowns.

Findings: At 400,000 users, response time increased by 60%, and error rates spiked due to database query bottlenecks.

Solution: Engineers optimized server scaling, caching mechanisms, and database indexing, allowing the site to handle 600,000 users smoothly.

Best Practices for Effective Load Testing

✔ Use the Right Load Testing Tools – Utilize Apache JMeter, LoadRunner, or Gatling for high-traffic simulations.

✔ Monitor Cloud-Based Performance – Test system response in AWS, Google Cloud, or Azure environments.

✔ Automate Load Tests – Integrate continuous performance testing into CI/CD pipelines.

✔ Analyze Historical Data – Compare test results to previous benchmarks for ongoing improvements.

✔ Optimize for Scalability – Implement load balancers, caching strategies, and database tuning to enhance performance.

Final Thoughts on Load Testing

Load testing is essential for ensuring system reliability, scalability, and optimal performance under real-world traffic. By proactively identifying bottlenecks, response time issues, and capacity limitations, organizations can optimize their applications and infrastructure for high demand.

Peak Testing: Assessing System Performance at Maximum Load

What is Peak Testing?

Peak testing is a specialized performance testing method designed to evaluate how a system behaves at its highest anticipated traffic levels. Unlike load testing, which examines system performance under steady expected traffic, peak testing focuses on the short periods of maximum expected traffic to confirm that the application remains stable, responsive, and free of performance bottlenecks.

By using cloud-based performance testing tools like BlazeMeter, organizations can simulate real-world peak loads to detect performance degradation, resource utilization inefficiencies, and infrastructure weaknesses before they impact end users.

Key Objectives of Peak Testing

✔ Validate System Stability at Peak Load – Ensures the system remains operational when concurrent users reach maximum levels.

✔ Identify Performance Bottlenecks – Detects slow database queries, CPU overload, and memory exhaustion.

✔ Assess Infrastructure Improvements – Determines whether server scaling and resource allocation need enhancements.

✔ Measure Performance Metrics – Tracks response times, throughput, and system resilience.

✔ Optimize Resource Utilization – Ensures CPU, memory, and network bandwidth are efficiently managed.

How Peak Testing Works

1️⃣ Define Peak Load Scenarios – Identify expected high-traffic periods (e.g., Black Friday, live sports streaming, or tax filing deadlines).

2️⃣ Simulate Peak Load Conditions – Use tools like BlazeMeter for cloud-based performance testing.

3️⃣ Monitor System Behavior – Track response times, throughput, and concurrent user impact.

4️⃣ Analyze Component Testing Results – Assess whether databases, APIs, and caching layers can handle peak demand.

5️⃣ Implement Infrastructure Improvements – Optimize server autoscaling, load balancing, and caching strategies.
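When defining peak scenarios (step 1), a traffic forecast is often expressed in requests per second, while load tools are usually configured in virtual users. For a closed workload, Little's Law connects the two: N = X × (R + Z). Here X is throughput, R is response time, and Z is each user's think time between requests. The helper names and figures below are illustrative assumptions, not values from the example that follows.

```python
import math


def users_for_peak(peak_rps: float, response_time_s: float, think_time_s: float) -> int:
    """Little's Law: concurrent users N = X * (R + Z), rounded up."""
    return math.ceil(peak_rps * (response_time_s + think_time_s))


def rps_from_users(users: int, response_time_s: float, think_time_s: float) -> float:
    """Inverse form: the request rate X = N / (R + Z) that N users generate."""
    return users / (response_time_s + think_time_s)


# Illustrative forecast: 2,000 requests/s at peak, 0.5 s responses, 4.5 s think time.
print(users_for_peak(2000, 0.5, 4.5))    # 10000
print(rps_from_users(10_000, 0.5, 4.5))  # 2000.0
```

Note that if response times rise under peak load, the same number of users generates fewer requests per second. For this reason, peak tests are often driven by a target arrival rate rather than a fixed user count.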

Key Performance Metrics in Peak Testing

| Metric | Description |
|---|---|
| Response Time | Measures how quickly the system processes requests at peak load. |
| Throughput | The number of requests handled per second. |
| Performance Bottlenecks | Identifies database slowdowns, CPU spikes, and network congestion. |
| Resource Utilization | Tracks CPU, memory, and bandwidth consumption under stress. |
| Infrastructure Improvements | Evaluates server scaling, load balancing, and failover mechanisms. |

Real-World Example: Streaming Service Peak Testing

Scenario: A global streaming platform prepares for a high-traffic live sports event.

Test Goal: Ensure that the system can handle 10 million concurrent users without buffering.

Findings: At 8 million users, performance degradation occurred due to overloaded content delivery networks (CDNs).

Solution: Engineers optimized content delivery and caching strategies, allowing the platform to support 12 million users smoothly.

Best Practices for Effective Peak Testing

✔ Use Reliable Testing Tools – Leverage BlazeMeter, JMeter, and LoadRunner for accurate simulations.

✔ Perform Cloud-Based Performance Testing – Test how systems react in AWS, Google Cloud, and Azure environments.

✔ Integrate Capacity Testing – Ensure that servers can scale dynamically to meet demand.

✔ Analyze Component Testing Results – Optimize databases, APIs, and backend services.

✔ Optimize Load Balancing Strategies – Distribute traffic efficiently across multiple servers to prevent failures.

Final Thoughts on Peak Testing

Peak testing is essential for high-traffic applications, ensuring that systems remain stable and efficient under maximum load conditions. By identifying performance bottlenecks, optimizing resource utilization, and implementing infrastructure improvements, businesses can prevent outages and deliver a seamless user experience during peak events.

Stress Testing: Pushing Systems Beyond Operational Limits

What is Stress Testing?

Stress testing is a critical performance testing method used to determine a system’s breaking point by exposing it to extreme load conditions. Unlike capacity testing, which assesses how much load a system can handle under normal operations, stress testing evaluates how an application performs when pushed beyond its limits, helping to uncover stability risks, security vulnerabilities, and weaknesses in failure recovery.

By simulating Denial of Service (DoS) attacks, high-traffic spikes, and resource exhaustion, stress testing shows whether systems can withstand unexpected demand surges while remaining responsive and able to recover.

Key Objectives of Stress Testing

✔ Identify Breaking Points – Determines the load level at which the system fails under extreme conditions.

✔ Assess System Stability – Ensures applications don’t crash under high user demand or prolonged use.

✔ Measure Recoverability – Tests how quickly a system can restore operations and recover data after failure.

✔ Evaluate Security Risks – Simulates Denial of Service (DoS) scenarios to identify potential security issues.

✔ Improve Error Handling – Detects how the system reacts to failures and prevents complete breakdowns.

How Stress Testing Works

1️⃣ Define Stress Conditions – Set extreme conditions, such as maximum concurrent users, transaction volume, or data processing loads.

2️⃣ Simulate Heavy Load – Use load-generation tools in a production-like environment to push the system beyond its typical workload.

3️⃣ Monitor System Reactivity – Observe response times, server behavior, and error rates.

4️⃣ Test Data Restoration and Recoverability – Check whether the system can recover lost or corrupted data post-failure.

5️⃣ Analyze Security Vulnerabilities – Identify sensitivity to cyber threats and unexpected breakdowns.
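The recoverability metric can be made concrete by measuring how long the error rate takes to return to normal after the stress phase ends. The sketch below requires several consecutive healthy samples, so that a single lucky sample is not reported as recovery. The function name, the 1% threshold, and the monitoring data are illustrative assumptions.

```python
from typing import Optional


def recovery_time_s(samples: list[tuple[float, float]], stress_end_s: float,
                    max_error_rate: float, stable_samples: int = 3) -> Optional[float]:
    """Seconds from the end of the stress phase until the error rate stays at or
    below max_error_rate for `stable_samples` consecutive samples.

    samples: (timestamp_s, error_rate) pairs sorted by timestamp.
    Returns None if the system never stabilizes within the recorded window.
    """
    streak = 0
    first_ok = 0.0
    for t, err in samples:
        if t < stress_end_s:
            continue  # still under stress; recovery has not started yet
        if err <= max_error_rate:
            if streak == 0:
                first_ok = t  # candidate start of the recovered period
            streak += 1
            if streak >= stable_samples:
                return first_ok - stress_end_s
        else:
            streak = 0  # a relapse resets the count
    return None


# Illustrative monitoring data sampled every 10 s; stress load stopped at t = 30 s.
observed = [(0.0, 0.0), (10.0, 0.30), (20.0, 0.50), (30.0, 0.40), (40.0, 0.02),
            (50.0, 0.005), (60.0, 0.004), (70.0, 0.003), (80.0, 0.002)]
print(recovery_time_s(observed, stress_end_s=30.0, max_error_rate=0.01))  # 20.0
```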

Key Performance Metrics in Stress Testing

| Metric | Description |
|---|---|
| Breaking Point | Maximum capacity before system failure. |
| Reactivity | System response under extreme load. |
| Recoverability | How quickly the system recovers after failure. |
| Error Handling | System’s ability to manage failures without complete shutdown. |
| Security Issues | Identifies vulnerabilities to attacks like DoS. |
| Stability | Ensures system remains functional under long-duration stress. |

Real-World Example: FinTech Platform Stress Testing

Scenario: A digital banking platform prepares for peak transaction loads during economic crises.

Test Goal: Simulate an extreme load of 5 million simultaneous transactions to evaluate system reactivity and stability.

Findings: At 3.8 million transactions, error rates increased due to database overload.

Solution: Engineers implemented load balancing, data restoration strategies, and failover servers to improve recoverability.

Best Practices for Effective Stress Testing

✔ Leverage DevOps Environments – Conduct tests in cloud-based, containerized, and distributed architectures.

✔ Simulate DoS Attacks – Use stress testing tools to test security vulnerabilities and reaction times.

✔ Ensure Rapid Data Restoration – Validate system recoverability after simulated failures.

✔ Monitor System Sensitivity – Analyze how sensitive the application is to sudden user spikes and system failures.

✔ Optimize Error Handling – Implement robust mechanisms for auto-recovery and failover activation.

Final Thoughts on Stress Testing

Stress testing is vital for preventing unexpected system failures and ensuring resilience under extreme load conditions. By identifying breaking points, security issues, and system recovery times, organizations can optimize infrastructure, enhance stability, and mitigate failure risks.

Spike Testing: Evaluating System Stability During Sudden Load Surges

What is Spike Testing?

Spike testing is a specialized performance testing technique that evaluates how a system responds to sudden and extreme increases in traffic or workload. Unlike load testing, which measures performance under gradual traffic increases, spike testing focuses on unexpected surges to determine whether the system remains stable, resilient, and responsive or if it crashes, slows down, or fails.

By identifying bottlenecks, error rates, response times, and resource utilization inefficiencies, spike testing helps organizations prepare for real-world traffic surges caused by events like flash sales, viral content, software releases, and cyberattacks.

Key Objectives of Spike Testing

✔ Evaluate System Resilience – Determines if the system can handle sudden spikes in traffic without failures.

✔ Monitor Response Times – Measures how quickly the system processes user requests under unexpected load surges.

✔ Identify Bottlenecks and Error Rates – Detects performance degradation, database failures, and application slowdowns.

✔ Assess System Recovery Time – Tests how efficiently the system stabilizes after a traffic surge subsides.

✔ Optimize Resource Utilization – Ensures that CPU, memory, and bandwidth are efficiently allocated under high stress.

How Spike Testing Works

1️⃣ Define Load Spike Conditions – Identify realistic traffic surge scenarios, such as a sudden increase from 1,000 to 100,000 users in seconds.

2️⃣ Simulate Extreme Load Spikes – Use load testing tools to generate sudden surges of virtual users or transactions.

3️⃣ Monitor System Behavior and Stability – Track resource utilization, error rates, and response times.

4️⃣ Analyze System Recovery – Evaluate how quickly the system returns to normal performance after the spike.

5️⃣ Optimize Infrastructure for Future Surges – Implement autoscaling, caching strategies, and failover mechanisms.
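The surge described in step 1 (1,000 to 100,000 users within seconds) can be expressed as a load profile: the target number of virtual users as a function of time. Most load tools let you configure staged profiles like this natively. The hypothetical function below only illustrates the shape of the profile. The ramp and hold durations are assumptions.

```python
def spike_profile(t_s: float, baseline: int, peak: int,
                  spike_start_s: float, ramp_s: float, hold_s: float) -> int:
    """Target virtual users at time t for a baseline -> spike -> baseline test.

    Load ramps linearly from baseline to peak over ramp_s seconds, holds at
    peak for hold_s seconds, then ramps back down over another ramp_s seconds.
    """
    ramp_up_end = spike_start_s + ramp_s
    hold_end = ramp_up_end + hold_s
    ramp_down_end = hold_end + ramp_s
    if t_s < spike_start_s or t_s >= ramp_down_end:
        return baseline
    if t_s < ramp_up_end:
        fraction = (t_s - spike_start_s) / ramp_s
    elif t_s < hold_end:
        fraction = 1.0
    else:
        fraction = (ramp_down_end - t_s) / ramp_s
    return round(baseline + fraction * (peak - baseline))


# 1,000 -> 100,000 users in 10 s, held for 2 minutes, printed every 30 s.
for t in range(0, 271, 30):
    print(t, spike_profile(t, baseline=1_000, peak=100_000,
                           spike_start_s=60, ramp_s=10, hold_s=120))
```

Keeping the load at baseline for a while after the spike is what makes step 4 possible. It provides a window in which to observe whether response times and error rates return to their pre-spike levels.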

Key Performance Metrics in Spike Testing

| Metric | Description |
|---|---|
| Response Times | Measures how fast the system processes requests under load. |
| Error Rates | Tracks failed transactions due to overload. |
| Recovery Time | Assesses how quickly the system stabilizes after the spike. |
| System Stability | Ensures that the application remains operational despite load surges. |
| Resource Utilization | Analyzes CPU, memory, and network usage during peak load. |

Real-World Example: Streaming Service Spike Testing

Scenario: A live-streaming platform prepares for a major eSports tournament expected to attract millions of viewers in seconds.

Test Goal: Simulate a traffic surge from 500,000 to 5 million concurrent users within 60 seconds.

Findings: At 3 million users, database queries slowed, increasing response times by 70%.

Solution: Engineers optimized load balancing, autoscaling, and caching mechanisms, enabling the system to handle 6 million users without slowdowns.

Best Practices for Effective Spike Testing

✔ Use Advanced Load Testing Tools – Leverage JMeter, LoadRunner, and cloud-based solutions for accurate simulations.

✔ Ensure System Resilience – Implement autoscaling, database sharding, and failover servers to handle traffic spikes.

✔ Monitor System Recovery Time – Analyze how fast the system returns to normal performance after extreme load conditions.

✔ Optimize Resource Allocation – Improve CPU, memory, and network bandwidth management for better efficiency.

✔ Test Multiple Spike Scenarios – Simulate both short, extreme surges and prolonged spikes to evaluate overall stability.

Final Thoughts on Spike Testing

Spike testing is essential for preventing system crashes, slowdowns, and failures during unexpected traffic surges. By identifying performance bottlenecks, optimizing resource utilization, and improving system resilience, businesses can ensure that their applications remain stable and responsive during high-demand events.

Soak Testing: Evaluating System Stability Under Sustained Load

What is Soak Testing?

Soak testing, also known as longevity testing, assesses how a system performs under sustained load for an extended period. Unlike a standard load test, which typically runs for a relatively short time, soak testing helps identify performance issues, memory leaks, and system degradation that only emerge over time.

By simulating real user behavior in a realistic test environment, soak testing ensures that applications maintain reliability, stability, and efficiency even after prolonged usage.

Key Objectives of Soak Testing

✔ Validate System Stability Over Time – Ensures the system remains operational and responsive under sustained load.

✔ Detect Performance Issues – Identifies memory leaks, slow response times, and resource depletion that may not appear in short-term tests.

✔ Assess Reliability and Longevity – Confirms that the system functions without crashes or slowdowns after continuous use.

✔ Optimize Resource Utilization – Monitors CPU, memory, and network bandwidth consumption over extended periods.

✔ Enhance Scalability and Infrastructure Resilience – Ensures cloud-based and on-premises systems can handle continuous demand without failure.

How Soak Testing Works

1️⃣ Define Test Duration – Simulate traffic patterns over hours, days, or weeks.

2️⃣ Create a Realistic Test Environment – Use continuous testing practices to mimic real-world usage.

3️⃣ Apply a Sustained Load – Run tests with consistent traffic levels to monitor system stability.

4️⃣ Monitor Performance Metrics – Use monitoring tools to track response times, error rates, and memory consumption.

5️⃣ Analyze System Degradation – Identify slowdowns, reliability issues, and performance bottlenecks.
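Step 5 can be partly automated by comparing the p95 response time in the first window of the run with the p95 in the last window. A large positive change points to gradual degradation. The sketch below is illustrative. The 24-hour window and the synthetic 10-day data set are assumptions, and the p95 helper uses the nearest-rank method.

```python
def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer math
    return ordered[rank - 1]


def degradation_pct(samples: list[tuple[float, float]], window_s: float) -> float:
    """Percent change in p95 latency between the first and last window of a soak run.

    samples: (timestamp_s, latency_ms) pairs collected over the whole run.
    A positive result means the system got slower over time.
    """
    start = min(t for t, _ in samples)
    end = max(t for t, _ in samples)
    early = [lat for t, lat in samples if t < start + window_s]
    late = [lat for t, lat in samples if t > end - window_s]
    baseline = p95(early)
    return 100.0 * (p95(late) - baseline) / baseline


# Synthetic 10-day run sampled hourly: 200 ms at first, 260 ms in the final day.
HOUR = 3600.0
run = [(h * HOUR, 200.0 if h < 216 else 260.0) for h in range(241)]
print(degradation_pct(run, window_s=24 * HOUR))  # 30.0
```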

Key Performance Metrics in Soak Testing

| Metric | Description |
|---|---|
| System Stability | Ensures the application remains functional over long durations. |
| Performance Issues | Detects gradual slowdowns, resource leaks, and inefficiencies. |
| Reliability | Confirms that the system maintains consistent performance under sustained load. |
| Resource Utilization | Tracks CPU, memory, and disk usage over time. |
| Real User Behavior | Simulates authentic user interactions in a prolonged test. |

Real-World Example: E-Commerce Platform Soak Testing

Scenario: A global e-commerce website runs soak testing to ensure stability during holiday sales.

Test Goal: Simulate high transaction volumes over 14 days to detect performance degradation.

Findings: After 10 days, memory leaks caused response times to slow by 30%.

Solution: Engineers optimized garbage collection, database queries, and caching mechanisms to maintain peak performance.

Best Practices for Effective Soak Testing

✔ Use Automated Monitoring Tools – Generate sustained load with tools like JMeter and track it in real time with monitoring platforms such as New Relic or Dynatrace.

✔ Test Under Realistic Conditions – Ensure the test reflects actual user behavior and workloads.

✔ Run Tests for Extended Periods – Conduct tests for days or weeks to detect long-term performance issues.

✔ Track Stability Trends – Identify patterns in response times, CPU usage, and system efficiency over time.

✔ Optimize Resource Allocation – Adjust autoscaling, caching strategies, and database indexing for long-term efficiency.

Final Thoughts on Soak Testing

Soak testing is essential for ensuring long-term system stability, detecting slow performance degradation, and optimizing resource utilization. By simulating real user behavior in a realistic test environment, organizations can prevent unexpected failures and deliver a seamless user experience over extended periods.

Scalability Testing: Evaluating a System’s Ability to Handle Increased Demand

What is Scalability Testing?

Scalability testing is a critical performance evaluation process that measures a system’s ability to scale up or down in response to increased user demand, transaction loads, and data processing requirements. By assessing horizontal scaling, vertical scaling, and cloud infrastructure elasticity, organizations can ensure that their systems remain high-performing, responsive, and efficient under growing workloads.

By identifying performance bottlenecks, optimizing autoscaling mechanisms, and fine-tuning load balancing, scalability testing enables businesses to provide seamless user experiences without downtime or slowdowns.

Key Objectives of Scalability Testing

✔ Assess Autoscaling Capabilities – Ensures automatic resource allocation to handle peak traffic efficiently.

✔ Measure Load Time Benchmarks – Tracks response times and latency benchmarks as demand increases.

✔ Optimize Load Balancing – Evaluates how well traffic is distributed across multiple servers.

✔ Validate Database Sharding Efficiency – Ensures large datasets are effectively partitioned to maintain fast query execution.

✔ Identify Performance Bottlenecks – Detects CPU overload, memory saturation, and inefficient resource utilization.

✔ Test Horizontal vs. Vertical Scaling – Compares the efficiency of server scaling strategies for improved system performance.

How Scalability Testing Works

1️⃣ Define Scaling Scenarios – Establish test conditions for sudden traffic surges, long-term growth, and seasonal peaks.

2️⃣ Perform Load Testing – Simulate realistic user loads and measure system response.

3️⃣ Monitor Cloud Infrastructure Performance – Evaluate autoscaling, load balancing, and database sharding.

4️⃣ Analyze Resource Utilization – Track CPU, memory, and network bandwidth consumption under varying workloads.

5️⃣ Optimize Server Scaling Strategies – Implement horizontal scaling, vertical scaling, and resource upgrading to improve performance.
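A common way to compare horizontal scaling results is scaling efficiency. This is the measured throughput at N nodes divided by N times the per-node throughput of the smallest configuration. A value of 1.0 means perfectly linear scaling, and falling values show diminishing returns from adding servers. The function name and the measurements below are illustrative assumptions.

```python
def scaling_efficiency(throughput_by_nodes: dict[int, float]) -> dict[int, float]:
    """Measured throughput divided by ideal linear scaling from the smallest configuration.

    1.0 means perfectly linear horizontal scaling; lower values show diminishing returns.
    """
    base_nodes = min(throughput_by_nodes)
    per_node = throughput_by_nodes[base_nodes] / base_nodes
    return {nodes: rps / (per_node * nodes)
            for nodes, rps in sorted(throughput_by_nodes.items())}


# Illustrative load-test results: requests/s at each node count.
measured = {1: 1_000.0, 2: 1_900.0, 4: 3_400.0, 8: 5_600.0}
for nodes, eff in scaling_efficiency(measured).items():
    print(f"{nodes} node(s): {eff:.0%} of linear")
```

A sharp drop in efficiency at some node count usually points to a shared bottleneck that adding servers cannot fix, such as a single database or an unevenly balanced load balancer.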

Key Performance Metrics in Scalability Testing

| Metric | Description |
|---|---|
| Load Time Benchmarks | Measures response times as system demand increases. |
| Performance Bottlenecks | Identifies hardware, database, and network slowdowns. |
| Autoscaling Efficiency | Evaluates how quickly resources scale up or down. |
| Horizontal vs. Vertical Scaling | Compares adding more servers vs. upgrading existing ones. |
| Database Sharding Performance | Tests data distribution efficiency across multiple servers. |

Real-World Example: E-Commerce Platform Scalability Testing

Scenario: A global e-commerce company prepares for a holiday shopping event with 10x traffic growth.

Test Goal: Ensure cloud infrastructure and autoscaling mechanisms can handle the surge without slowdowns.

Findings: Load balancing inefficiencies caused high latency and increased response times at peak demand.

Solution: Engineers optimized database sharding, server scaling, and resource upgrading, improving system capacity by 300%.

Best Practices for Effective Scalability Testing

✔ Use Cloud-Based Load Testing Tools – Leverage AWS, Google Cloud, and Azure testing environments for real-world accuracy.

✔ Implement Continuous Monitoring – Track autoscaling performance, latency benchmarks, and resource usage in real time.

✔ Optimize Load Balancing Strategies – Ensure traffic is evenly distributed across multiple servers.

✔ Test Horizontal and Vertical Scaling – Determine whether adding more servers or upgrading existing ones is more effective.

✔ Improve Database Performance – Use database sharding and indexing to prevent slow queries during peak loads.

Final Thoughts on Scalability Testing

Scalability testing is essential for businesses that expect fluctuating demand, rapid growth, and high availability requirements. By optimizing autoscaling, load balancing, and database performance, companies can build resilient, high-performing applications that seamlessly adapt to changing workloads.

Volume Testing: Evaluating System Performance Under Large Data Loads

What is Volume Testing?

Volume testing is a critical performance evaluation technique that measures how well a system handles large volumes of data to ensure efficient data processing, storage, and retrieval. Unlike load testing, which focuses on user traffic, volume testing assesses database performance, disk I/O, and query optimization under bulk operations.

By identifying performance bottlenecks, indexing inefficiencies, and capacity planning issues, volume testing ensures that applications can scale effectively while maintaining fast response times and high data throughput.

Key Objectives of Volume Testing

✔ Assess Data Throughput – Ensures the system can handle large datasets efficiently without delays.

✔ Identify Performance Bottlenecks – Detects slow query execution, inefficient indexing, and disk I/O limitations.

✔ Optimize Database Indexing Efficiency – Improves how data is stored, accessed, and retrieved under heavy loads.

✔ Measure Scalability and Capacity Planning – Tests whether the system can scale storage and processing power dynamically.

✔ Ensure Stability Under Bulk Operations – Evaluates how well the system processes large transactions and data imports.

How Volume Testing Works

1️⃣ Define Test Scenarios – Establish conditions for high-volume data ingestion, retrieval, and processing.

2️⃣ Simulate Bulk Operations – Perform flood testing by injecting massive data loads into the system.

3️⃣ Monitor Database Performance – Track query execution times, indexing efficiency, and data processing speed.

4️⃣ Analyze Disk I/O and System Throughput – Measure how effectively the system handles large-scale read/write operations.

5️⃣ Optimize for Scalability – Improve query optimization, indexing, and storage management to enhance performance.
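The steps above can be sketched with Python's built-in sqlite3 module: bulk-load a table, record ingestion throughput, then time the same lookup and inspect its query plan before and after adding an index. The table layout, row count, and idx_account index are hypothetical; a real volume test would target your production database engine, use realistic data distributions, and repeat each timing many times rather than trusting a single run.

```python
import random
import sqlite3
import time


def run_volume_test(num_rows: int = 200_000, seed: int = 42) -> dict:
    """Bulk-load a hypothetical transactions table, then compare a lookup with and without an index."""
    rng = random.Random(seed)
    conn = sqlite3.connect(":memory:")  # in-memory database keeps the sketch self-contained
    conn.execute(
        "CREATE TABLE transactions (id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL)"
    )

    # Simulate bulk operations: insert num_rows rows in one transaction and measure throughput.
    rows = ((i, rng.randint(1, 10_000), rng.random() * 1_000) for i in range(num_rows))
    start = time.perf_counter()
    with conn:  # commits the transaction on success
        conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)
    ingest_s = time.perf_counter() - start

    query = "SELECT COUNT(*), SUM(amount) FROM transactions WHERE account_id = ?"

    def timed_query() -> float:
        t0 = time.perf_counter()
        conn.execute(query, (1234,)).fetchone()
        return time.perf_counter() - t0

    def query_plan() -> str:
        # Each EXPLAIN QUERY PLAN row is (id, parent, notused, detail).
        return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + query, (1234,)))

    # Monitor database performance without an index (expect a full table scan).
    before_s, before_plan = timed_query(), query_plan()

    # Optimize: index the filtered column, then measure again.
    conn.execute("CREATE INDEX idx_account ON transactions(account_id)")
    after_s, after_plan = timed_query(), query_plan()

    loaded = conn.execute("SELECT COUNT(*) FROM transactions").fetchone()[0]
    conn.close()
    return {
        "rows_loaded": loaded,
        "ingest_rows_per_s": num_rows / ingest_s,
        "query_s_before_index": before_s,
        "query_s_after_index": after_s,
        "plan_before_index": before_plan,
        "plan_after_index": after_plan,
    }


if __name__ == "__main__":
    for key, value in run_volume_test().items():
        print(f"{key}: {value}")
```

The query plan is the more reliable signal here: the switch from a table scan to an index search explains why lookup time stops growing linearly with table size, while single wall-clock timings can be noisy.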

Key Performance Metrics in Volume Testing

| Metric | Description |
|---|---|
| Data Throughput | Measures how fast the system processes bulk data. |
| Database Performance | Evaluates query speed, indexing efficiency, and read/write operations. |
| Disk I/O Performance | Tracks how well the system manages storage and retrieval under heavy loads. |
| Capacity Planning | Assesses whether the system can scale for future data growth. |
| Query Optimization | Ensures database queries remain efficient even with large datasets. |

Real-World Example: Financial Institution Volume Testing

Scenario: A major bank runs volume testing to ensure its database can process millions of daily transactions without delays.

Test Goal: Simulate bulk transactions, high-volume queries, and real-time data retrieval.

Findings: At 10 million records, query performance degraded by 40% due to inefficient indexing.

Solution: Engineers improved database indexing, tuned disk I/O, and optimized slow queries, reducing response times by 60%.

Best Practices for Effective Volume Testing

✔ Use Advanced Database Testing Tools – Leverage SQL Server Profiler, Apache JMeter, and LoadRunner to monitor database performance.

✔ Simulate Real-World Bulk Operations – Test large-scale transactions, data imports, and backups under realistic conditions.

✔ Analyze Database Indexing Efficiency – Optimize indexing strategies to prevent slow query execution.

✔ Monitor Disk I/O Performance – Ensure the system can handle high-speed read/write operations without failures.

✔ Plan for Scalability – Implement capacity planning strategies to prepare for future data growth.

Final Thoughts on Volume Testing

Volume testing is essential for data-intensive applications, ensuring that systems can handle large datasets, optimize performance, and prevent processing slowdowns. By focusing on query optimization, database indexing, and storage management, businesses can maintain high efficiency and scalability even as data volumes grow.

Resilience Testing: Ensuring System Recovery and Stability Under Failures

What is Resilience Testing?

Resilience testing is a critical performance evaluation method that assesses a system’s ability to recover from failures and continue operating under adverse conditions. Unlike stress testing, which identifies breaking points, resilience testing focuses on error handling, failover mechanisms, and system stability to ensure that applications remain functional even during unexpected failures.

By incorporating reliability testing, recovery testing, and redundancy measures, resilience testing helps businesses minimize downtime, protect data integrity, and maintain seamless user experiences even when systems face disruptions.

Key Objectives of Resilience Testing

✔ Evaluate Failover Mechanisms – Ensures that backup systems and redundancy solutions activate when a failure occurs.

✔ Measure Recovery Time – Determines how quickly a system recovers after a crash, hardware failure, or software malfunction.

✔ Test Error Handling Efficiency – Assesses how well the system manages and logs failures without data loss.

✔ Ensure System Stability and Health – Monitors whether applications can remain operational under fluctuating conditions.

✔ Validate Post-Recovery Stability with Regression Testing – Confirms that after recovery, the system operates without performance degradation.

How Resilience Testing Works

1️⃣ Define Failure Scenarios – Simulate server crashes, database failures, or network disruptions.

2️⃣ Trigger System Failures – Introduce planned disruptions to test system health and recovery mechanisms.

3️⃣ Monitor Recovery Testing Metrics – Track recovery time, failover efficiency, and data consistency.

4️⃣ Analyze Regression Testing Results – Ensure the system remains stable and reliable post-recovery.

5️⃣ Optimize for System Stability – Implement better redundancy, enhanced error handling, and disaster recovery protocols.
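The resilience workflow above can be sketched in-process: a client tries backends in priority order, the test harness injects a failure into the primary, and the harness records which backend answered and how long failover took. FakeBackend, ServiceUnavailable, call_with_failover, and run_failover_scenario are hypothetical names; in a real test the backends would be network services, and failures would be injected with chaos-engineering or infrastructure tooling.

```python
import time
from typing import Callable, Sequence, Tuple


class ServiceUnavailable(Exception):
    """Raised by a (hypothetical) backend that is down."""


class FakeBackend:
    """In-process stand-in for a server; `up` simulates its health."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.up = True

    def __call__(self) -> str:
        if not self.up:
            raise ServiceUnavailable(self.name)
        return f"response from {self.name}"


def call_with_failover(backends: Sequence[Callable[[], str]]) -> Tuple[str, int]:
    """Try each backend in priority order; return the first success and its index."""
    last_error = None
    for index, backend in enumerate(backends):
        try:
            return backend(), index
        except ServiceUnavailable as exc:
            last_error = exc  # record the failure and fall through to the next backend
    raise RuntimeError("all backends failed") from last_error


def run_failover_scenario() -> dict:
    primary, replica = FakeBackend("primary"), FakeBackend("replica")
    results = {"normal": call_with_failover([primary, replica])}

    primary.up = False  # trigger a planned failure
    start = time.perf_counter()
    results["failover"] = call_with_failover([primary, replica])
    results["failover_s"] = time.perf_counter() - start  # recovery metric

    primary.up = True  # restore, then confirm traffic returns to the primary
    results["restored"] = call_with_failover([primary, replica])
    return results


if __name__ == "__main__":
    print(run_failover_scenario())
```

The final check after restoring the primary is the regression step in miniature: recovery is only complete when traffic routes back to the intended backend.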

Key Performance Metrics in Resilience Testing

| Metric | Description |
|---|---|
| Recovery Time (vs. RTO) | Measures how quickly a system restores normal functionality, compared with its recovery time objective (RTO). |
| System Health | Ensures the application remains stable after recovery. |
| Failover Mechanisms | Evaluates automatic switchovers to backup resources. |
| Error Handling Efficiency | Tracks how well the system detects, logs, and responds to failures. |
| Regression Testing Results | Confirms that recovery doesn’t introduce new issues or performance bottlenecks. |
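A minimal way to measure recovery time is to poll a health check after injecting a failure until it passes or a timeout expires, then compare the result with the RTO target. The health check, the polling interval, the 2-second simulated recovery, and the 30-second RTO below are assumptions; injecting a fake clock keeps the sketch instant and deterministic.

```python
import time
from typing import Callable, Optional


def measure_recovery_time(
    health_check: Callable[[], bool],
    timeout_s: float,
    interval_s: float = 0.5,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Poll health_check until it passes; return seconds elapsed, or None on timeout."""
    start = clock()
    while True:
        elapsed = clock() - start
        if health_check():
            return elapsed
        if elapsed >= timeout_s:
            return None
        sleep(interval_s)


class FakeClock:
    """Simulated clock so the example runs instantly and deterministically."""

    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


clock = FakeClock()
# Hypothetical service that becomes healthy 2 seconds after the injected failure.
recovery = measure_recovery_time(lambda: clock.now >= 2.0, timeout_s=30.0,
                                 clock=clock.time, sleep=clock.sleep)
rto_target_s = 30.0  # hypothetical recovery time objective
meets_rto = recovery is not None and recovery <= rto_target_s
print(f"recovered in {recovery}s; meets RTO: {meets_rto}")  # recovered in 2.0s; meets RTO: True
```

Against a live system you would pass a real health probe and keep the default clock; the polling interval bounds how precisely recovery time can be measured.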

Real-World Example: Banking System Resilience Testing

Scenario: A global banking institution runs resilience testing to ensure secure transaction processing even during server failures.

Test Goal: Simulate database crashes, network failures, and infrastructure disruptions to evaluate system recovery.

Findings: At peak transaction loads, failover mechanisms took 6 minutes to activate, causing service delays.

Solution: Engineers optimized redundancy strategies and system health monitoring, reducing recovery time to under 30 seconds.

Best Practices for Effective Resilience Testing

✔ Implement Automated Recovery Testing – Use DevOps automation tools to test failover mechanisms in real time.

✔ Monitor System Health Continuously – Use tools like New Relic, Datadog, and Dynatrace for real-time system stability analysis.

✔ Perform Regular Regression Testing – Ensure new updates do not weaken system resilience post-recovery.

✔ Test Under Multiple Failure Conditions – Simulate hardware failures, software bugs, and network outages to build a stronger recovery plan.

✔ Optimize Recovery Time – Fine-tune backup and disaster recovery solutions to ensure minimal downtime and rapid failover activation.

Final Thoughts on Resilience Testing

Resilience testing is essential for business continuity, disaster recovery, and system stability. By identifying weak failover mechanisms, optimizing recovery strategies, and improving system health monitoring, organizations can ensure minimal downtime and uninterrupted service, even during failures.

Conclusion: Performance Testing

Performance testing is essential for ensuring that applications are stable, scalable, and resilient under various conditions. Each type of performance test plays a crucial role in identifying potential bottlenecks, security vulnerabilities, and system inefficiencies before software deployment.